How big of a role does testing play in ensuring something of this magnitude never happens again?

So, what went wrong in the first place?

Allowing dynamic changes to kernel drivers without re-certification

New updates around the infamous outage are revealed every day. However, analysis of the issue points to one root cause worth sharing:

A null pointer error, or nullpointerexception, happens when data at a particular "address" (pointer) has been mishandled. It's either blank (null), or the location it's pointing to is blank. When this happens in the kernel layer, Windows immediately halts the whole system to avoid corrupting any other data.

This tends to only happen if zero testing is involved. If they had installed the update on any Windows machine and powered it on, the issue would have revealed itself.

Also, what happened seems to be deeper than just the Windows kernel. It was third-party software that the Windows kernel granted access to, exposing its inner parts. Think of someone having direct access to your brain without having a conversation with you first. It’s quite shocking that a dependency of a dependency could have such ramifications.

No phased rollout and no rollback strategy

Clearly, this was a massive lack of quality assurance and testing processes. Even if there was no testing, there should've at least been a rollback strategy. Having a rollback strategy or plan allows you to identify a bug, roll it back, and address it before it gets out of hand. There also should've been some sort of phased rollout to a small subset of people to prevent a failure at this scale.

Honestly, even rolling out internally to a private internal environment of a few different types of virtual machines would’ve been a safer choice. Any changes could be incrementally shipped out to higher-level environments that only developers and quality assurance teams have access to. This means more experienced people get to see the code first, increasing the likelihood of finding bugs before it's too late.

To be fair, maybe a rollback wasn't even possible because this update was at the kernel level. For anyone new to computer science, when you power on the motherboard, the CPU wakes up and says, "Hey, I'm a computer!" After checking the memory, the hard drive, and other things, it then hands everything over to the system. The operating system says, “Okay, great all the hardware checks out, let’s launch Windows.” With this update, that’s the point at which the machine dies–it can’t finish booting up Windows. Windows had to have been running for a rollback to be possible—so, a rollback was out of the question.

This is even more reason to manage your risks extra carefully and test, test, test.

Is it safe to say that simply testing could've prevented this?

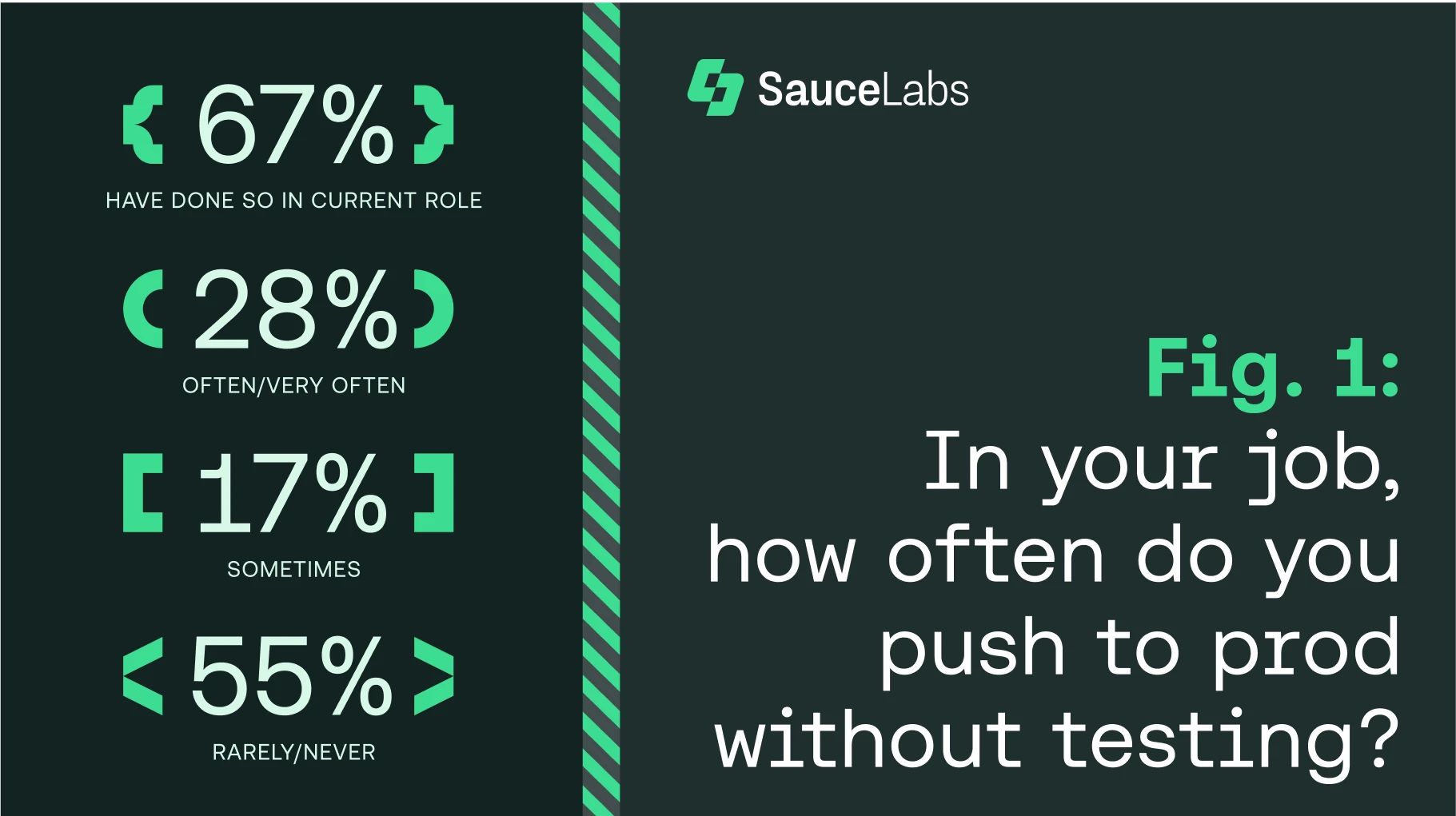

Last year, Sauce Labs published a report called Developers Behaving Badly asking respondents how often they push to production without testing. 67% of respondents said that they do, which seems like an exceptionally high number.

As developers, is this a realistic number?

Sauce Labs Developers Behaving Badly Report 2023

Again, we’re only discussing what we know, but it’s hard to escape the notion that there was a lack of testing. Any computer configured a certain way with a certain OS that has undergone an update and subsequently died means there was a significant lack of testing. To play devil’s advocate, the developer or developers behind the “update” may have had some modified version of a Windows OS running in their test lab.

However, the inescapable conclusion remains: they did not test it in a real-world environment before rolling it out.

Again, what happens serves as an incredible learning opportunity for all of us.

“Speaking for myself, if I’m quickly developing some software or prototyping, I don’t always write tests, or test much, in general. [As a developer], you just develop, see if it works, and keep iterating on it. But this also perpetuates bad behavior because it becomes normalized. It’s important to think about how your changes will impact an entire functioning system. [In the case of the update], it’s my feeling that this behavior has probably existed for a while and the system kept growing bigger and bigger and became a big 'oops'.”

Nikolay Advolodkin

After running many tests, it's common to find something wrong at the last minute. You think it'll be easy to fix, but you don't always think about the consequences, and how even the smallest change will affect everything else. It’s quite possible it was a working update and at the last minute, one little tweak broke everything.

It’s amazing-- this is what we expected on Y2K, only we got it 24 years late, and in a completely different form than expected. Even though this is probably the world’s first 11-figure bug, we got off easy compared to what it could’ve been had this been something with malicious intent.

Top 5 ways to not become the cause of the next global outage:

Gradual Rollout - Allow X amount of users to opt into a canary release strategy where they only test on systems that are not going to cause serious harm if they go down and allow them to get the first update. If the update operates for X amount of time (this is where an SRE team can be particularly beneficial), then the next tier of folks get the update, and so on until the update is worldwide. You would not allow a hospital, for instance, to opt into this. There's way too much at stake. But a small e-commerce site might be okay. You could create a program that businesses can opt into for free if they're comfortable with taking on a certain amount of risk.

Integration Acceptance Tests - The recent catastrophic update was probably a failure to test integration: failing to connect one software with another software, not knowing if they're even compatible in the first place. Acceptance user tests are great for this sort of thing.

“In my career working in the browser and mobile space,” Nikolay Advolodkin begins, “That’s where I see many problems happening – at the integration layer. Software that doesn't work well with other software, or different microservices that don't work well together, can cause many problems."Memory Management - “One of the first things I learned as a junior developer is that memory allocation problems and NullPointerExceptions are always your fault." Marcus shares with Test Case Scenario. If you’re getting those anywhere it should be one giant red flag on the quality of the code. If you’re letting them go (which happens often), then you’re announcing to the world ‘I’m okay with being sloppy’. There may have been a memory leak or nullpointerexception. This would be a software bug and a failure of logic in the code, not a simple mistake.

Cross-Platform Testing - Test across different browsers and mobile platforms to ensure compatibility.

Lean on your SLAS for guidance - It's important to understand not only what you’re disclosing to users and what they depend on from your software, but also what your software is depending on. For example, the Log4J vulnerability that occurred two or three years ago took many companies by surprise. It’s amazing how much software depended on the Java library. What’s even scarier is the vulnerability was in Log4j for years, meaning things could’ve been much worse. Luckily, it was patched before it could cause too much damage. It did, however, cause lingering mistrust for open source. This is why it’s crucial to be aware of your terms of service and disclose dependencies to your users.

Always put your customers first

Let your dependencies and their dependencies set the standard. You can bet money several of the core operating systems at the heart of the systems affected by the ‘update’ had a dependency that would eventually cause this. People need to understand what they’re signing up for.

What is the breaking point? When will people care? Maybe we’ve already experienced the breaking point.

Tune into the full discussion with our technical experts here: