Imagine you're a chef with a signature dish that must taste perfect every time, whether cooked on a gas stove, an electric burner, or even over a campfire. This culinary challenge is akin to the world of cross-platform testing in software development.

In our digitally diverse world, applications are no longer confined to a single platform. They live in an ecosystem of varying hardware and software configurations. For businesses to serve up delightful user experiences, it's crucial that their apps perform seamlessly across the platforms their customers use.

This is where cross-platform testing takes center stage. It's an indispensable ingredient in the recipe of modern end-to-end software testing strategies. By simulating a smorgasbord of devices, operating systems, browsers and other platforms, cross-platform testing ensures that applications not only meet performance and usability standards but also retain their flavor regardless of where they're served. Testing across these varied platforms is like taste-testing our dish on different stoves – it helps pinpoint and rectify platform-specific quirks early in the cooking process, saving businesses from the indigestion of costly post-release fixes.

Below, we'll explore what cross-platform testing really entails, how it's executed, its significance in the digital world, and tips to master the art of this essential testing process.

What Is Cross-Platform Testing?

Cross-platform testing is the practice of testing software across multiple platforms to ensure a seamless user experience. In this context, a platform is a specific hardware and/or software environment where applications can run.

Examples of platform types that are relevant for cross-platform testing include:

Hardware devices: Some apps need to support multiple types of hardware devices – such as ARM-based and x86-based servers, or different types of mobile phones.

Operating systems: If you build an app that needs to run on Windows, Linux, and macOS, for example, you'd want to test it across all of these OS platforms.

Browsers: Browser-based apps need to function properly in any browser – Firefox, Chrome, Edge, and so on – that might host them.

This is not an exhaustive list; in complex situations, cross-platform testing might also extend to testing applications across different virtualization hypervisors, container orchestration tools, and so on, since these can also serve as the basis for distinct application hosting environments. But for most consumer-oriented apps, testing on different types of hardware devices, operating systems, and browsers is usually the main focus.

Why Is Cross-Platform Testing Important?

Cross-platform testing matters for a simple reason: it helps ensure that applications behave as required regardless of which platform hosts them. All-in-one testing platforms like Sauce Labs Platform For Test make it easier to verify that applications function correctly across different browsers, devices, and operating systems.

To explain what that means in detail, let's step back and talk about how the platform that hosts an app can impact the way the app functions. Although developers typically design apps with the goal of having them provide the same features, level of performance, and reliability no matter where they are hosted, problems unique to hosting platforms can sometimes lead to unexpected application issues.

For example, differences in the way that various types of Web browsers interpret and render HTML and CSS code could cause the interface for a Web application to look different depending on which browser users use to connect to it. Buttons or images might not appear where they are supposed to, for instance, or some text could be garbled.

By testing applications on multiple browsers, developers and QA engineers can catch issues related to how the interface is rendered. In turn, they can fix those issues before pushing the application out to end-users.

Without cross-platform testing processes in place, development and QA teams would test their software under only one type of platform or configuration. As a result, they would risk overlooking problems that occur only when the app runs on certain types of platforms. Users might report bugs to them once they deploy the app into production, but finding those bugs prior to deployment via cross-platform testing is preferable to having end-users experience the problems when the apps are in real-world use.

How Does Cross-Platform Testing Work?

When it comes to approaches and tools, cross-platform testing can be carried out in many ways. Teams can use automated testing, manual (live) testing, or a combination of the two. They can also employ any test automation framework, such as Selenium or Appium, as long as it supports the platforms they want to test on. As long as tests run on multiple platforms, cross-platform testing is happening.

That said, regardless of which tools you choose, the process for running cross-platform tests usually looks like this:

Identify which platforms to test on: It's often not possible to test on every possible platform that customers might use. For example, because there are tens of thousands of different mobile device types, you can't realistically test on each one. You should instead identify which platforms are most popular among your users and prioritize those for testing.

Write tests: If you're automating at least some of your tests, you'll need to write them using a framework that supports the platforms you identified.

Deploy your tests across platforms: With tests written, you can run them on whichever platforms you want to test on.

Collect and review test results: If the same test fails on one platform but not another, you likely have a problem that is platform-dependent.

Fix and retest: After addressing platform-specific bugs, run your tests again to confirm that the bugs were fixed – and that developers didn't accidentally introduce any new issues during the remediation process.
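The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a real test harness: the `Platform` class, the `run_across_platforms` and `platform_dependent_failures` helpers, and the simulated `checkout_button_is_visible` test are all hypothetical names invented for this example. In practice, the test function would drive a real browser or device through a framework such as Selenium or Appium.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Platform:
    """Hypothetical platform descriptor: an operating system plus a browser."""
    os: str
    browser: str


def run_across_platforms(test: Callable[[Platform], bool],
                         platforms: list[Platform]) -> dict[Platform, bool]:
    """Run the same test on every platform; True means pass, False means fail."""
    results = {}
    for platform in platforms:
        try:
            results[platform] = bool(test(platform))
        except Exception:
            # A crash on one platform is recorded as a failure there.
            results[platform] = False
    return results


def platform_dependent_failures(results: dict[Platform, bool]) -> list[Platform]:
    """Return the failing platforms, but only if the test passed somewhere else.

    A test that fails everywhere points to a general bug, not a platform one.
    """
    failed = [p for p, ok in results.items() if not ok]
    passed = [p for p, ok in results.items() if ok]
    return failed if passed else []


# Step 1: identify platforms (illustrative values, not a recommendation).
PLATFORMS = [
    Platform("Windows", "Chrome"),
    Platform("macOS", "Safari"),
    Platform("Linux", "Firefox"),
]


# Step 2: write the test. This stand-in simulates a Safari-only layout bug.
def checkout_button_is_visible(platform: Platform) -> bool:
    return platform.browser != "Safari"


# Steps 3 and 4: run everywhere, then review results per platform.
results = run_across_platforms(checkout_button_is_visible, PLATFORMS)
suspects = platform_dependent_failures(results)
for p in suspects:
    print(f"Platform-dependent failure on {p.os} / {p.browser}")
```

For the final step, you would rerun `run_across_platforms` after the fix and check that `platform_dependent_failures` returns an empty list.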

How to Set Up Different Platforms for Testing

In many cases, the biggest challenge isn’t deciding which platforms to test on or writing automated tests – it's obtaining access to multiple platforms to host tests. Unless your team maintains a collection of different types of hardware devices, operating systems, and browsers, you may not have sufficient test environments on hand to cover all of the platforms you need to support.

This challenge tends to be especially acute in the context of cross-platform mobile device testing because most development and QA teams don't have dozens or hundreds of mobile devices sitting around their offices for testing purposes. And while emulators and simulators (which provide virtual environments where tests can run) can help mitigate this issue, some tests require real devices to deliver the greatest levels of accuracy. Therefore, emulators and simulators aren’t always the right answer.

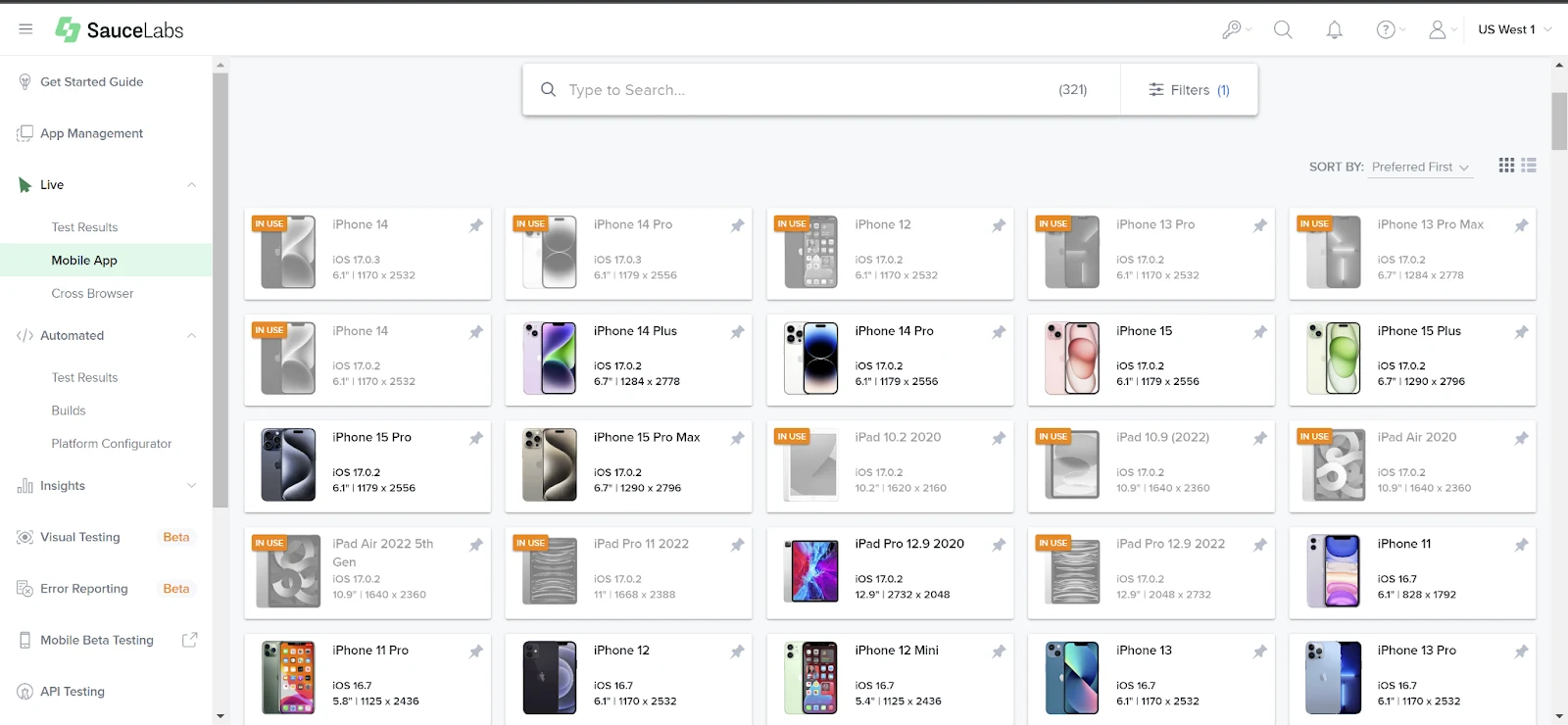

To solve this challenge, teams often turn to software testing cloud platforms like Sauce Labs. Sauce provides access to a wide selection of hardware devices where teams can run tests. It also enables simple cross-browser testing so that engineers can test apps on any browsers they need to support.

Using this approach, developers and QA engineers can easily obtain access to multiple platforms for testing purposes, without having to set up and manage the platforms themselves.

Cross-Platform Testing Best Practices

As long as you're running tests across multiple platforms, you've taken the most important step toward ensuring that you deliver a great end-user experience no matter which devices, operating systems, and/or browsers your customers choose to use. But to make the cross-platform testing process even more effective, consider the following best practices:

Choose platforms strategically: As we mentioned, it's usually not practical to test on every possible platform that customers could be using. Instead, make strategic choices about which platforms to test on by collecting data about which platforms most of your users are running or are likely to use. One way to do this is to look at demographic data about platform trends and compare it with your user base; for example, iOS vs. Android usage rates vary between people of different ages. You can also collect data from your production apps, if you include logic in them to report which platforms they are running on.

Update platform selections regularly: The platforms that are most popular among your customers are likely to change over time. For that reason, you'll want to update the platforms you test on regularly. If you haven't changed your platform list in several years, you may be overlooking important platforms.

Test under multiple platform configurations: In addition to testing on multiple platforms, it's sometimes necessary to test under different configurations on the same platform. For example, you may want to run your tests under different screen resolutions on the same platform to check for bugs that occur only under certain resolutions.

Use emulators and simulators selectively: Emulators and simulators can help to provide access to multiple testing platforms using virtualization. But tests that run in virtual environments don't always fully replicate application behavior on real devices, so you should use emulators and simulators with caution. Testing in virtual environments is better than not testing on key platforms at all, but testing on real devices whenever possible is typically preferable due to increased test reliability.
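As a rough sketch of how the first and third practices might be applied, the snippet below picks the most-used platforms until a coverage target is met, then expands each one into several screen resolutions. The usage percentages, resolutions, and function names (`select_platforms`, `expand_configurations`) are made up for illustration; real numbers would come from your own analytics.

```python
from itertools import product


def select_platforms(usage_percent: dict[str, int], target_coverage: int) -> list[str]:
    """Pick the most-used platforms until their combined share reaches the target."""
    selected = []
    covered = 0
    ranked = sorted(usage_percent.items(), key=lambda item: item[1], reverse=True)
    for name, share in ranked:
        if covered >= target_coverage:
            break
        selected.append(name)
        covered += share
    return selected


def expand_configurations(platforms: list[str],
                          resolutions: list[str]) -> list[tuple[str, str]]:
    """Pair every selected platform with every resolution to test under."""
    return list(product(platforms, resolutions))


# Illustrative usage data (percent of users per platform); not real statistics.
USAGE_PERCENT = {
    "Android / Chrome": 40,
    "iOS / Safari": 30,
    "Windows / Chrome": 15,
    "Windows / Edge": 10,
    "Linux / Firefox": 5,
}
RESOLUTIONS = ["1920x1080", "390x844"]

chosen = select_platforms(USAGE_PERCENT, target_coverage=80)
matrix = expand_configurations(chosen, RESOLUTIONS)

for platform, resolution in matrix:
    print(f"Test on {platform} at {resolution}")
```

Rerunning the selection whenever the usage data changes is one simple way to keep the platform list up to date, as the second practice recommends.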

Getting the Most from Cross-Platform Testing

If your customers use more than one platform to run your software, cross-platform testing is critical for ensuring you don't overlook bugs that could impact them. And although it requires a little more effort than testing on just one platform, it's not that much harder – especially with the help of a testing cloud, like Sauce Labs, that provides easy access to the platforms that teams want to include in their testing strategy.