Why does my fridge have an app? How could a software update break a device that was working before? Why does a bug in one end of the supply chain create a domino effect that causes flight delays?

Marc Andreessen told us software was eating the world back in 2011, but he didn’t predict how much indigestion the world would have in the process.

In a previous article, Everything Is Software and Everything Is Broken, I argued, as the title implies, that software is more often broken or faulty today than it has ever been before.

The article resonated with people, but I also received some welcome feedback. In particular, Jason Huggins, co-founder of Selenium, argued that things like his dehumidifier breaking were much more disruptive to his life than software breaking.

“Maybe I’ll concede that things are broken,” Huggins said, “but I would disagree on the severity. I would argue that something that was broken and then also killed people – I would be in agreement on that.” But if it’s Twitter crashing, he said, then it’s just not a big deal.

In this article, I want to advance the argument by agreeing that, yes, software breakages – in isolation – are not necessarily severe. The severity of the problem, for me, isn’t a result of how broken software is or how often software is faulty. The risk is that software is increasingly involved in everything we touch, so the more fragile software is, the more fragile the world is.

Discussions of “software quality” often tend toward abstraction, so here, I’d like to make it more concrete. Let’s explore software breakages through the lens of impact: If software is everywhere, what does that mean when software breaks?

The net rate of software failure has risen

Non-technical end-users and skilled developers often have the same complaint: Everything has an app these days – whether it’s necessary or not. (And if not a literal app, a software feature or layer that dictates how you interact with the product.)

From a product development perspective, this is simply added value. A dehumidifier with a digital screen and associated app can show much more information than an interface with a limited number of dials. The company can also update the software as necessary, keeping older devices in line with new developments.

For end-users, however, additional software often feels like a bad tradeoff. Many of these features feel extraneous, and the benefits are often outweighed when the software breaks or malfunctions, making an otherwise working product inoperable.

In Everything Is Software and Everything Is Broken, I argued that we shouldn’t assume software quality is rising in a linear and predictable fashion. In some ways and certain contexts, software quality is worse than it once was.

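But that article missed a nuance: There’s a good possibility that the absolute rate of software failure (how often any given piece of software fails) has dropped but that the net rate of software failure (how often we run into failures across all the software we use) has risen.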

Testing is one of the biggest reasons software quality has increased. I always think about Tim Bray’s article, Testing in the Twenties, where he quotes Gerald Weinberg’s saying, “If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization,” and argues that because of testing, “In the builders-and-programmers metaphor, civilization need not fear woodpeckers.”

There is a clear before-and-after here: before we tested, and after testing became a precondition to deployment. Given that, it’s hard to dispute that software quality and reliability have risen. Software often feels more faulty, however, because the net rate of software failure has potentially risen despite that trend.
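A rough way to see how both trends can hold at once is to multiply the number of software interactions by the chance that any one of them fails. The numbers below are made up purely for illustration (they don’t come from any report or study), and `expected_failures` is a hypothetical helper:

```python
def expected_failures(interactions_per_month: int, failure_rate: float) -> float:
    """Expected number of failures a person runs into in a month."""
    return interactions_per_month * failure_rate


# Hypothetical "before": few software-driven interactions, each fairly unreliable.
before = expected_failures(interactions_per_month=10, failure_rate=0.02)

# Hypothetical "after": each interaction is twice as reliable,
# but there are ten times as many of them.
after = expected_failures(interactions_per_month=100, failure_rate=0.01)

print(f"before: {before:.1f} failures/month, after: {after:.1f} failures/month")
```

In this sketch, the failure rate per interaction is cut in half, yet the number of failures a person actually experiences goes up fivefold.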

We can see signs of this in research. In our Every Experience Counts report, for example, 55% of people report engaging in digital experiences more than 20 times per month (demonstrating how widespread software features are) and 45% report that things malfunction sometimes, often, or always (demonstrating how fragile these features can make the world).

Software has eaten the world, and now, it’s everywhere – in our cars, lightbulbs, airplanes, fridges, speakers, and more. And, of course, referring to software as a unitary thing is illusory. Every feature is connected to a web of open-source components and libraries as well as countless vendor products – all of which can break.

This leads me to a complementary argument to the previous article: Everything has software, so everything will break.

Five ways software fragility affects the material world

There are many ways software can break, and if the breakage only affects our ability to use the software itself, it doesn’t always feel consequential. But the more we attach software to other things, the more likely those failures will matter, and the more often those consequences will be direct, immediate, and material.

This relatively brief tour is demonstrative, not exhaustive. Throughout, you’ll see a theme: Software features only sometimes benefit end-users, but software always provides new ways for the product to fail—sometimes catastrophically.

1. Feature (and fragility) creep

The most obvious way we see the intrusion of software (and see software as an intrusion) is through “appification” and IoT. Appification refers to the addition of often unnecessary apps and to the shift from web-based interfaces to app-based ones. IoT (Internet of Things) refers to a network of software-embedded, internet-connected devices.

In both cases, we often (but not always) see the introduction of unnecessary features that help companies market a new product but don’t always make it better. When companies add software, they not only get to market the addition of the software itself but also market every upgrade (as made possible through the software).

Over time, feature creep means that every additional software feature is less likely to benefit the user and more likely to be marketing fodder. Eventually, devices that once worked well without software can become overloaded with software features. However, because these features are embedded in devices that we depend on, software failures can cause device failures, which really can be disruptive.

In 2019, for example, a Google Cloud outage caused issues across a range of its websites and devices. On the one hand, many Google sites, including Gmail and YouTube, became slow or inoperable – not the worst problem.

On the other hand, the outage also affected all of Google’s Nest products, meaning many people couldn’t use their thermostats, smart locks, and baby cameras. Connecting these devices to the Internet provided convenience, but the sudden disappearance of that functionality, which owners neither caused nor could have predicted, shows the fragility.

Similarly, also in 2019, Tesla’s app went down for hours, leaving many drivers stranded. The drivers could have kept the manual key fobs Tesla offers, which would have worked, but we again see the tradeoff: Software provides new, more convenient functionality but then makes things more inconvenient than before when it breaks.

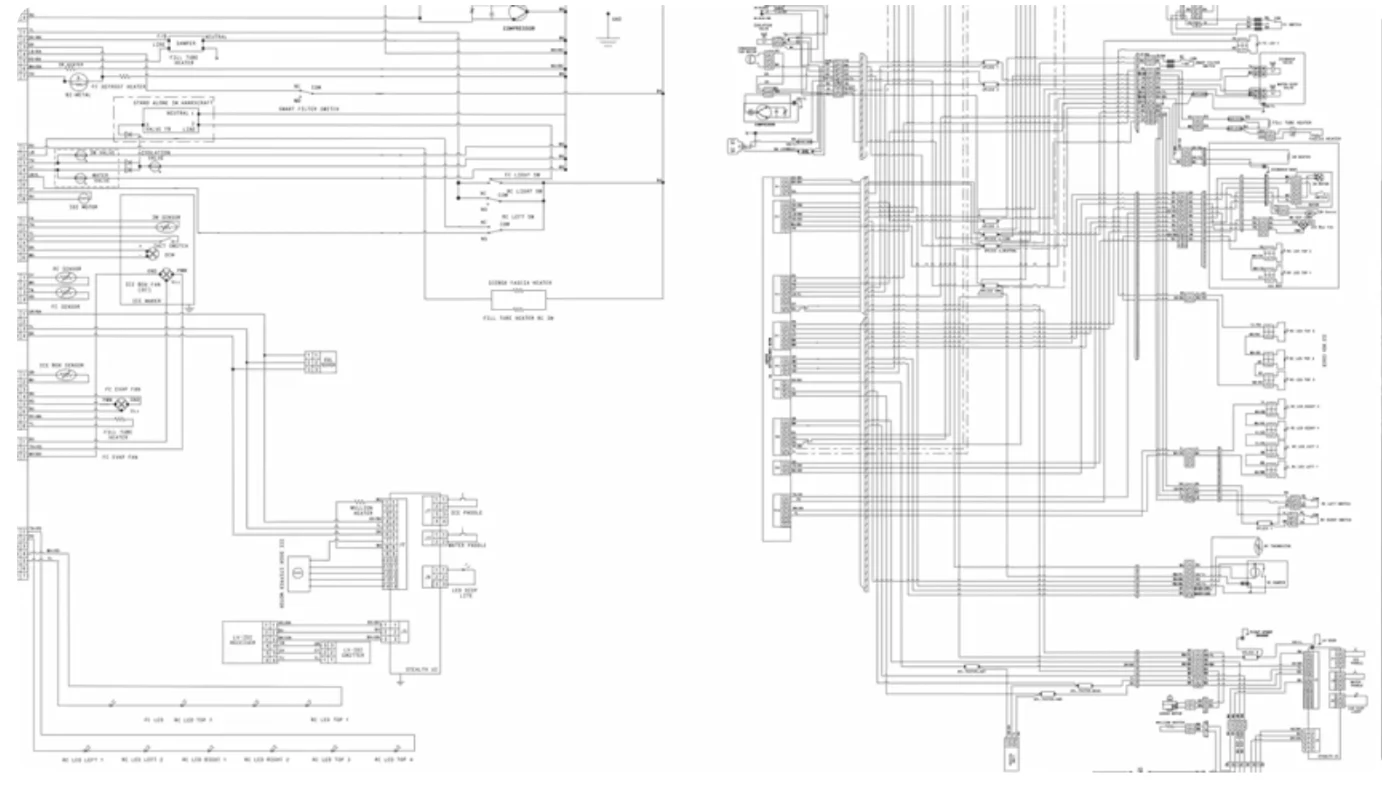

That’s why, even when people are buying things as mundane as fridges, experts often recommend consumers avoid products that include software. In one video, for example, an appliance expert shares a table-sized diagram for a standard fridge and argues that complexity creates more drawbacks than benefits.

In this context, software is added complexity first and foremost. Software is more difficult to troubleshoot than, say, a jammed ice box, and when it fails, the entire device can become dysfunctional.

2. Upgrade cycles and downgrades in quality

With SaaS, the software industry gained the ability to iterate and update software rapidly over the air. With an adjacent rise in testing, companies were able to deploy software, rapidly test and iterate, and improve it over time.

This is good in theory and is often good in practice, but there are many cases where updates can make things worse, and formerly good products can suddenly start failing.

Again, a tradeoff: Having a product that can improve automatically and wirelessly is undoubtedly good, but the experience of holding a functional device that suddenly becomes nonfunctional is distinctly uncomfortable.

In 2024, for example, Spotify announced not only that it would discontinue its Car Thing device but also that it would make all existing devices nonfunctional. Many users were outraged, and eventually, Spotify offered refunds.

It’s worth emphasizing that these users purchased the devices, and the devices worked, but because they were connected to Spotify, the company was able to unilaterally shut them down. This kind of failure is unique to software and unique to an era of software-connected devices.

This isn’t the only instance: In 2023, Amazon announced a new edition of its Echo Show device that would display photos on the home screen for a monthly subscription fee. In 2024, Amazon discontinued the feature, and the people who bought these devices – for this feature! – are now stuck seeing ads.

In both cases, software enabled some potentially greedy business intentions, but good intentions can also cause issues.

In 2024, Sonos released a new app that made customers unhappy because it had tons of bugs and regressions. Many previously functional Sonos devices became harder to use or entirely inoperable. On an earnings call, the Sonos CEO even admitted the app’s reputation was hurting sales, as evidenced by how upset former Sonos fans were.

The quality of this release was clearly not intentional. In a Reddit AMA, the CEO told unhappy users that simply reverting to the previous app wasn’t possible.

“Sonos is not just the mobile app,” he wrote, “but software that runs on your speakers and in the cloud, too. In the months since the new mobile app launched, we’ve been updating the software that runs on our speakers and in the cloud to the point where today S2 is less reliable & less stable than what you remember. After doing extensive testing, we’ve reluctantly concluded that re-releasing S2 would make the problems worse, not better.”

Good intentions or not, bad software can break devices in the material world, and as a result, the advantages of a software-enabled device can start to feel more like disadvantages.

3. Supply chain domino effects

I couldn’t write this article without mentioning CrowdStrike. In 2024, we saw what many deemed the world’s biggest IT outage when a bug in CrowdStrike’s testing software let a faulty update pass validation, and that update broke its Falcon software across millions of Windows machines.

The bug caused over $5 billion in damages (so far) because CrowdStrike’s software is part of so many machines (via Windows), and Windows is part of so many products and systems that depend on it.

Thanks to software (and the way one software feature connects each device dependent on that feature to a web of components, vendors, and other products), a failure at one end of the chain was able to cause disasters on the other end of the chain. Worse, as we saw with United, which faced three days of delays, and Delta, which faced five, the people on the other end of the chain might be completely unable to troubleshoot.

The scale of this incident, however, shouldn’t lead us to assume it’s isolated or otherwise unique. Back in 2016, for example, an open-source developer unpublished numerous NPM-managed modules, including left-pad – a module that was fetched 2,486,696 times in a single month. The code itself was simple, but so many products depended on it that its sudden erasure was disastrous.

Eventually, NPM “un-unpublished” the code, but the point remains: Software connects to a wide range of other software components, whether open source or closed source, and all of it can break for one reason or another.
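To make the domino effect concrete, here is a minimal sketch of how the removal of one small package reaches everything that depends on it, directly or indirectly. Apart from left-pad itself, every package name in the graph is hypothetical, and `affected_by` is an illustrative helper rather than anything NPM provides:

```python
from collections import deque

# Hypothetical dependency graph: each package maps to the packages it depends on directly.
DEPENDS_ON = {
    "web-app": ["build-tool", "ui-kit"],
    "build-tool": ["string-utils"],
    "ui-kit": ["string-utils"],
    "string-utils": ["left-pad"],
    "cli-tool": ["arg-parser"],
    "arg-parser": [],
    "left-pad": [],
}


def affected_by(removed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every package that depends on `removed`, directly or transitively."""
    # Invert the graph so we can walk from a package to the packages that use it.
    dependents: dict[str, list[str]] = {}
    for package, dependencies in depends_on.items():
        for dependency in dependencies:
            dependents.setdefault(dependency, []).append(package)

    affected: set[str] = set()
    queue = deque([removed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


print(sorted(affected_by("left-pad", DEPENDS_ON)))
```

In this toy graph, "web-app" never lists left-pad as a dependency, yet it still breaks when left-pad disappears, while "cli-tool" is untouched because nothing in its chain leads back to the removed package.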

When CrowdStrike and left-pad broke, many of the users suffering through sudden breakages didn’t even know what CrowdStrike and left-pad were. No wonder the world feels fragile.

4. Legacy tech aging in place

Sometimes, the best developers can develop a blinkered perspective because the technology they’re working on and are most aware of is on the cutting edge. When you’re pushing that edge every day, it can crowd out your perspective.

But the rest of the world is often far behind the edge. When we talk about software fragility, we can make a big mistake by focusing only on modern software practices because many important systems rely on legacy technology.

There’s no better example of this than the stubbornness of COBOL. Much of the code that supports banking software is still in use three decades after it was originally built. Wealthsimple even interviewed one of the coders who built some of that software back in the day, and at 73, he still gets requests to fix and update the software.

Again, a tradeoff: Certainly, banks with software are better than ones without. However, when well-intentioned banks built COBOL-based systems back in the 1960s, they couldn’t have anticipated that one day, the language would fade from use and that the systems they built would go unmaintained and unreplaced.

The United States government often uses similarly antiquated systems, and the most dramatic example of this legacy tech in action (or inaction) happened in 2020. When millions lost their jobs and tried to get unemployment benefits due to the COVID-19 pandemic, nearly 20 states suffered payment delays because of legacy systems.

The frailty of these legacy systems got to the point where New Jersey Governor Phil Murphy pleaded with the public, begging for programmers who could handle COBOL.

In the postmortems after the fact, he said, we’d all be asking, “How the heck did we get here when we literally needed cobalt [sic] programmers?”

It all brings to mind a well-known quote from legendary programmer Ellen Ullman: “We build our computer systems the way we build our cities: over time, without a plan, on top of ruins.”

Can we build better software in better ways? Yes. But until that magical, maybe unrealistic day, we’ll only have more layers of legacy tech that make the world feel more fragile with each layer added.

5. Intervention-resistant software

When companies add software to a product, they inadvertently create a supply/demand problem. Software is difficult for non-developers to understand and fix, so the more software there is, the more likely people will run into issues that domain experts can’t intervene in or fix.

Many car mechanics, for example, have struggled to learn how to handle manufacturer-specific software issues now that nearly all cars are essentially smart devices. Manufacturers have had to outsource many of their software features to companies that don’t normally deal with the rigor of life-and-death situations.

“Established carmakers have been forced to cede valuable dashboard real estate to Silicon Valley while remaining the target of consumer ire — and class-action lawsuits — when something goes wrong,” writes Jack Ewing, a business writer for the New York Times.

Here, numerous issues start to collide: Much of the software is feature creep (#1), the upgrade cycle can be onerous because it takes longer to manufacture a new car than a new smartphone (#2), and much of this software will soon become legacy tech (#4).

Ewing writes, “As established carmakers pack vehicles with more and more technology, flawed software seems likely to continue to generate lawsuits.”

Part of the reason these lawsuits remain likely is that software can make devices harder to repair when they break, and make it harder for even skilled users to intervene with broken or failing devices.

There’s likely no better example of this than Ethiopian Airlines Flight 302, which dove at full speed into a field six minutes after takeoff – killing everyone on board. The plane that crashed was a Boeing 737 MAX 8, and investigators discovered the cause was new flight control software (called MCAS) that could, unbeknownst to the pilots, force the plane into a nosedive.

Gregory Travis, a software developer and pilot, analyzed the software issues in a long-form article in IEEE Spectrum. The central problem, as Travis writes, is that “Boeing’s solution to its hardware problem was software.” Boeing wanted to market a new plane as being more or less the same as its previous models, and they hid a software feature – MCAS – to do so.

Without getting too deep in the weeds, the core issue was that the software refused oversight. As Travis writes, in normal circumstances, pilots can cross-check faulty machines and readings against each other to figure out what’s going on. But in this case, the iron-clad software logic couldn’t do the same and directed the plane as if it weren’t nose-diving – even though it was.

“In a pinch, a human pilot could just look out the windshield to confirm visually and directly that, no, the aircraft is not pitched up dangerously,” Travis writes. “That’s the ultimate check and should go directly to the pilot’s ultimate sovereignty. Unfortunately, the current implementation of MCAS denies that sovereignty. It denies the pilots the ability to respond to what’s before their own eyes.”
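The kind of cross-checking Travis describes can be sketched generically. What follows is not a model of MCAS or of any real avionics system. The function names, the tolerance, and the limit are all hypothetical, and the only point is that automation comparing redundant readings can decline to act when they disagree:

```python
from typing import Optional

# Hypothetical tolerance: how far apart two readings may be before we distrust both.
MAX_DISAGREEMENT_DEGREES = 5.0


def cross_checked_reading(sensor_a: float, sensor_b: float) -> Optional[float]:
    """Return the averaged reading if both sensors roughly agree, else None."""
    if abs(sensor_a - sensor_b) > MAX_DISAGREEMENT_DEGREES:
        return None  # The sensors disagree, so there is no trustworthy value to act on.
    return (sensor_a + sensor_b) / 2


def should_automation_act(sensor_a: float, sensor_b: float, limit: float) -> bool:
    """Act automatically only when a cross-checked reading exceeds the limit."""
    reading = cross_checked_reading(sensor_a, sensor_b)
    if reading is None:
        return False  # Hand the decision back to the human operator.
    return reading > limit


# Both sensors agree on a high reading, so the automation may act.
print(should_automation_act(18.0, 19.0, limit=15.0))
# One sensor reports an implausible value, so the automation stands down.
print(should_automation_act(74.5, 5.0, limit=15.0))
```

The design choice in this sketch is that disagreement between sensors is treated as a reason to defer to a person rather than as a reason to trust whichever reading looks more alarming.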

Ethiopian aviation journalist Kaleyesus Bekele found, by listening to the recordings, that the pilots were fighting the software: “What was heard on the cockpit voice recorder, the first officer saying, ‘Pitch up! Pitch up! Pitch up!’ while the MCAS was kicking and nosediving the aircraft [...] But it was so difficult for them to keep the aircraft under control, so finally, it nosedived near Bishoftu town.”

Numerous lawsuits followed the crash, and Boeing’s CEO stepped down. Among the many lessons of this disaster is that software introduced a bug the pilots couldn’t see and a flawed course that they couldn’t intervene in and correct.

Crossing the chasm between bad and good

Over the years, companies have underestimated just how good software has to be to make its benefits worth the additional complexity and fragility. Software is inherently complex, so the addition of software always means the addition of complexity, which often means fragility and failure.

This doesn’t mean software developers' work is bad. If anything, end-users can quickly (and almost unfairly) grow acclimated to the access that software gives them but feel frustration when that access breaks—frustration that doesn’t always account for the high 9s normally on offer.
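For a sense of what those 9s mean in practice, the arithmetic is simple enough to sketch. The helper names below are hypothetical, the availability figures are round examples rather than anyone’s published numbers, and the chained calculation assumes components fail independently, which real systems often don’t:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def downtime_hours_per_year(availability: float) -> float:
    """Hours per year a service is expected to be down at a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


def chained_availability(availabilities: list[float]) -> float:
    """Availability of a feature that needs every component up (independent failures assumed)."""
    result = 1.0
    for availability in availabilities:
        result *= availability
    return result


for label, availability in [("three nines", 0.999), ("four nines", 0.9999)]:
    print(f"{label}: {downtime_hours_per_year(availability):.2f} hours down per year")

# A feature that depends on three components, each at three nines.
chain = chained_availability([0.999, 0.999, 0.999])
print(f"chained: {downtime_hours_per_year(chain):.2f} hours down per year")
```

Three nines works out to about 8.76 hours of downtime a year, which is impressive engineering. But a feature that depends on several such components inherits all of their downtime, so the experience on the user’s end is worse than any single component’s number suggests.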

However, in an industry that’s long prioritized the MVP model and iteration over time, quality can too often be an afterthought, and the consequences of good-but-not-great software can be completely underestimated.

With decades of software development behind us and decades of seeing software deployed, upgraded, replaced, stuck, and rotten, the industry has to take a clearer look at the kind of quality necessary to make software—and the world that increasingly depends on it—reliable.

When we see these standards, we can more often decide that a fridge doesn’t actually need an AI assistant.