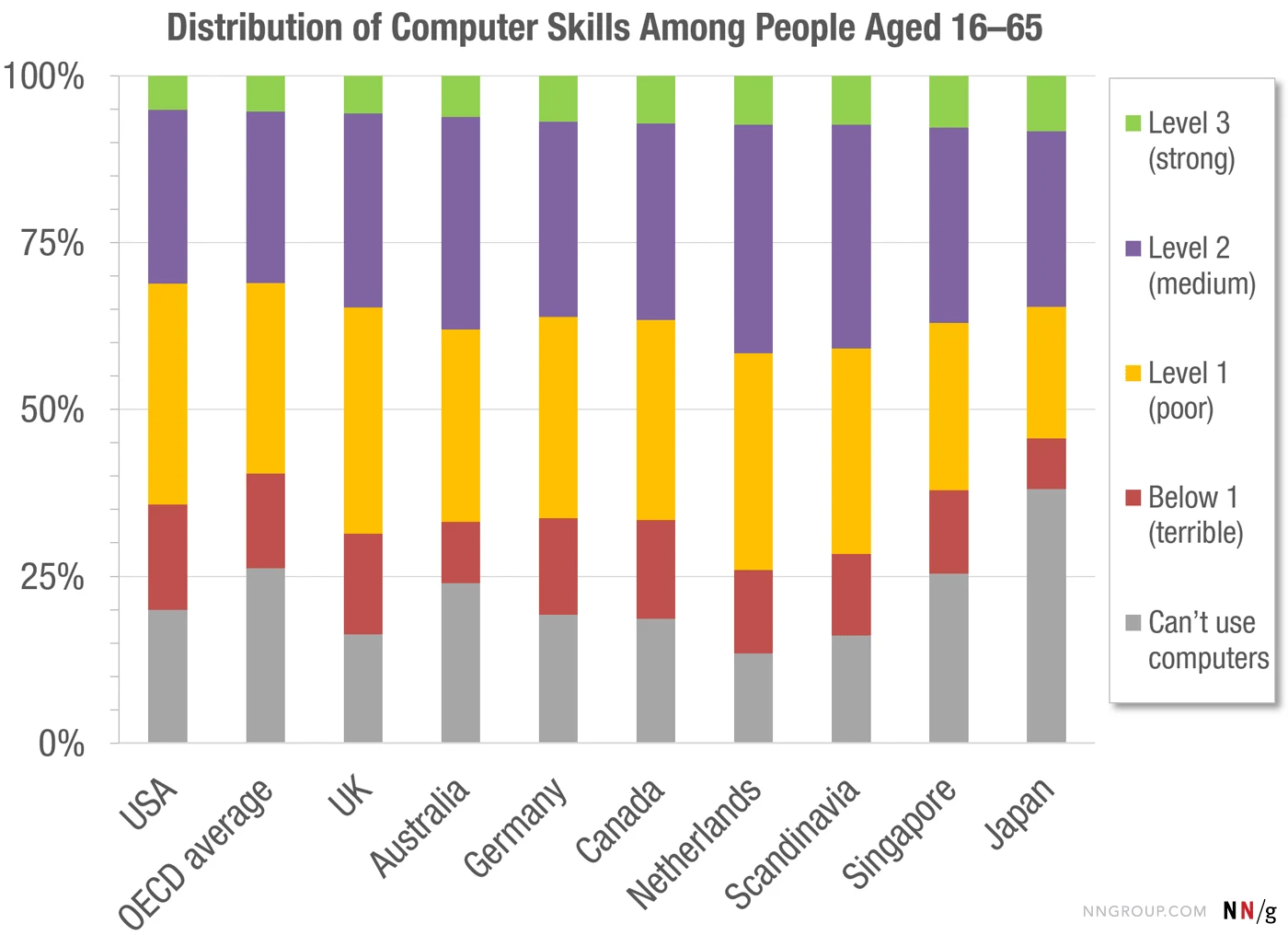

You, dear reader, are one of the greatest computer users in the entire world.

I don’t know you, but I can say that with confidence. You might not be as confident, but I can test you: Could you schedule a meeting room via a scheduling app using information spread across several emails in your inbox?

If you can, congratulations! According to a study of 215,942 people across 33 countries, you can use a computer better than 92-95% of adults worldwide (Level 3 in the graph below).

Image: nngroup.com

Isn’t it strange, then, that it’s a pain to get your printer to work; that you can go hoarse convincing your smart devices to listen to you; that you’re troubleshooting software at least a little bit almost every day? How can you be among the best computer users in the world if so much software breaks on you so often?

Grumbling about “the state of software these days” has become a near-daily ritual. Every once in a while, someone will write a really in-depth critique of some particular software and rack up some votes on Hacker News. But software doesn’t seem to be getting any better.

Marc Andreessen told us software was eating the world back in 2011, but we weren’t warned about how buggy the world would be afterward. Developers and end-users alike feel this, albeit differently, and the result is a gradual erosion of trust in software and technology.

To spoil the article up-front: It’s not you. It’s software. But the interesting part is how we got here and what, if anything, we can do about it.

Has software always been this broken?

Software, broken software, bugs, and complaints about all three date back to long before software ate anything. If there’s a more recent, potentially more consequential change, it’s people’s growing willingness to argue that the problem does not, in fact, exist between keyboard and chair.

A short timeline of developers complaining about brokenness

Complaints about software are common, but the most prolific source is often the people who make it. You don’t need to look hard to find them.

Kelsey Hightower, formerly a distinguished engineer at Google, is one of many developers who would prefer that products have less software involved.

In 2020, Soren Bjornstad, a software engineer at RemNote, wrote Everything’s Broken, Everything’s Too Complicated. In it, he calls out Andreessen, too, writing:

“Everything now relies on software — as Marc Andreessen famously said in 2011, ‘software is eating the world.’ And software almost never works right, at least not software complex enough to eat the world.”

Like many authors in this genre, Bjornstad was inspired by a shockingly bad experience, made all the more shocking by his extensive background in building software. In short, he was trying to load a Microsoft Azure certification exam, and a bevy of frustrating, obscure problems nearly made him late for it.

“I’m known for being unusually quick on my technical feet, and dealing with this kind of crap is literally my full-time job as a systems developer and DevOps engineer, and I almost missed the exam,” he continues. “What in tarnation are other people supposed to do?”

In 2012, Scott Hanselman wrote Everything's broken and nobody's upset. In another staple of the “developer complains about software” genre, Hanselman uses his experience to point out just how bad things are, writing, “I work for Microsoft, have my personal life in Google, use Apple devices to access it and it all sucks.”

As the genre requires, he also includes a bulleted list of issues, capping them off by writing, “All of this happened with a single week of actual work. There are likely a hundred more issues like this. Truly, it's death by a thousand paper cuts.”

In 2007, Jeff Atwood, co-founder of Stack Overflow, wrote Why does software spoil? Nearly two decades ago, Atwood wrote about a pattern that has only become worse since: “For some software packages, something goes terribly, horribly wrong during the process of natural upgrade evolution. Instead of becoming better applications over time, they become worse. They end up more bloated, more slow, more complex, more painful to use.”

Atwood articulates an emotional complaint that’s often more implicit in other posts, writing, “If this spoilage goes on long enough, eventually you begin to loathe and fear the upgrade process. And that strikes me as profoundly sad, because it rips the heart out of the essential enjoyment of software engineering. We write software. If we inevitably end up making software worse, then why are we bothering? What are we doing wrong?”

Unfortunately for Atwood, we can trace an unsatisfying answer back a couple more decades. In his 1986 essay “No Silver Bullet,” Fred Brooks, author of The Mythical Man-Month, wrote, “Building software will always be hard. There is inherently no silver bullet.” That building software has remained hard through the decades likely isn’t surprising; that overall software quality hasn’t risen in parallel with, say, storage capacity and processor speed is.

Software quality and performance don’t progress linearly

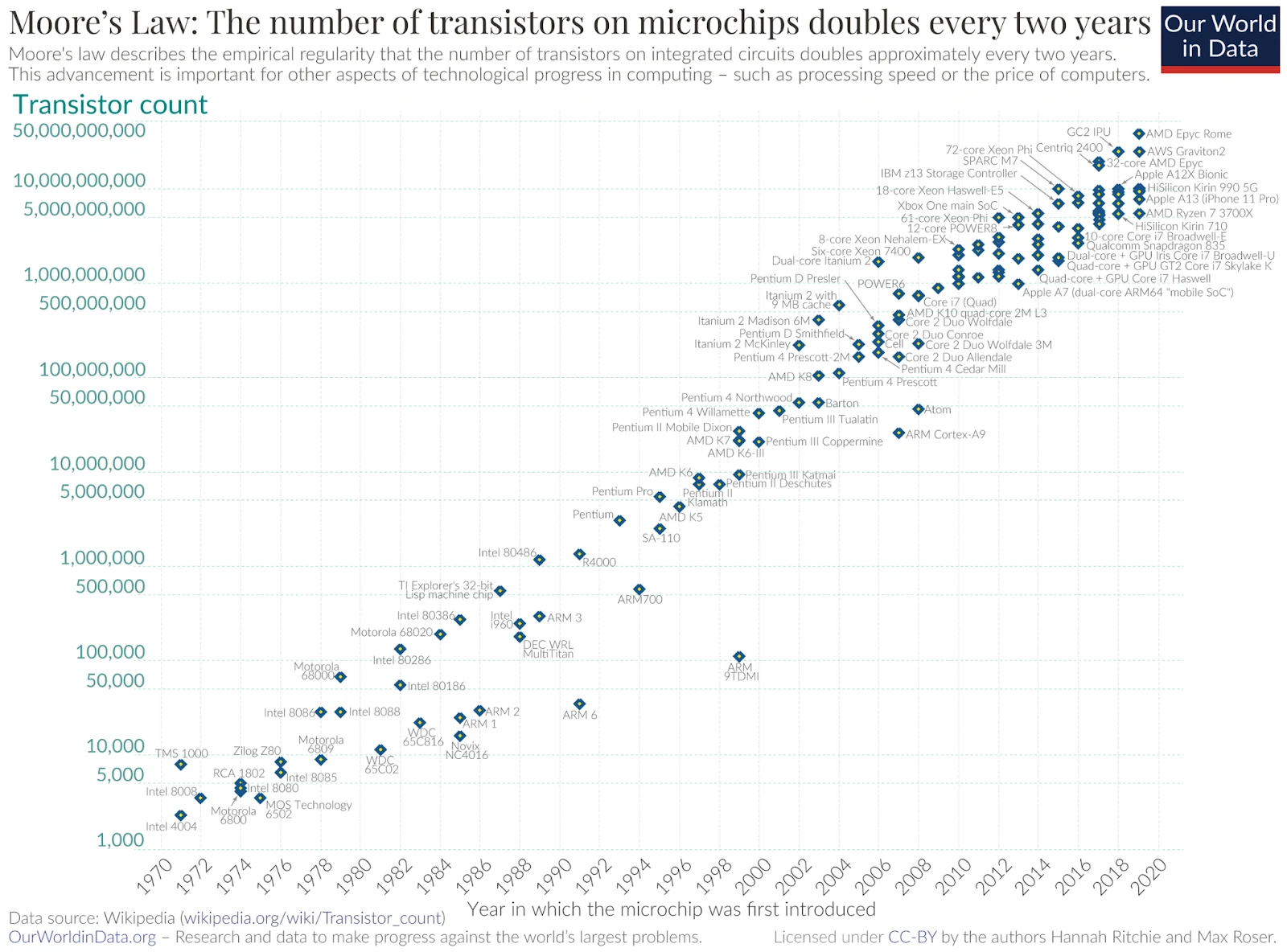

Dave Karpf, an Associate Professor in the School of Media and Public Affairs at George Washington University, once wrote, “The most essential element of the Silicon Valley ideology is its collective faith in technological acceleration” and that “Silicon Valley is the church of Moore’s Law.”

This framing invites some natural criticism, but two simple observations are worth focusing on: Moore’s Law has largely held, yet the acceleration pattern fails in surprising places.

First, Moore’s Law has held true for decades. The pattern might not last forever (Nvidia CEO Jensen Huang argues it’s already dead), but its longevity is remarkable, and other technologies, such as storage capacity, have advanced at a similar pace.

Second: Despite this steady advancement, software quality hasn’t always kept up a parallel pace.

At first glance, we’d expect software, despite short-term dips, to keep improving over time. But the evidence suggests otherwise.

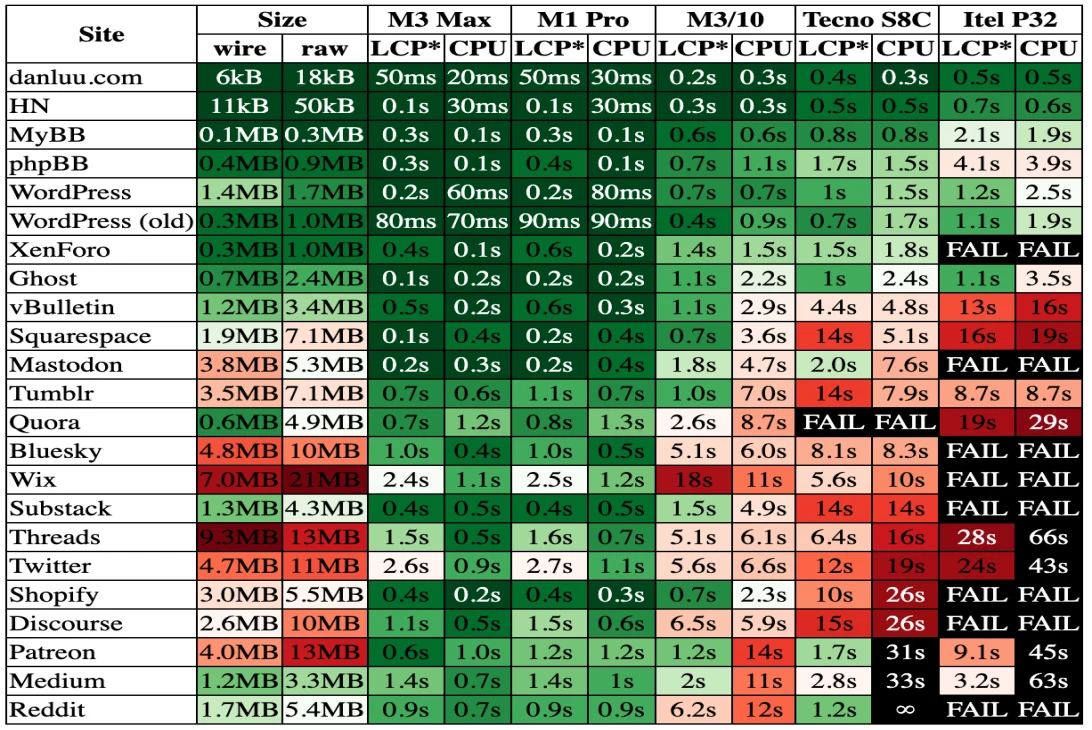

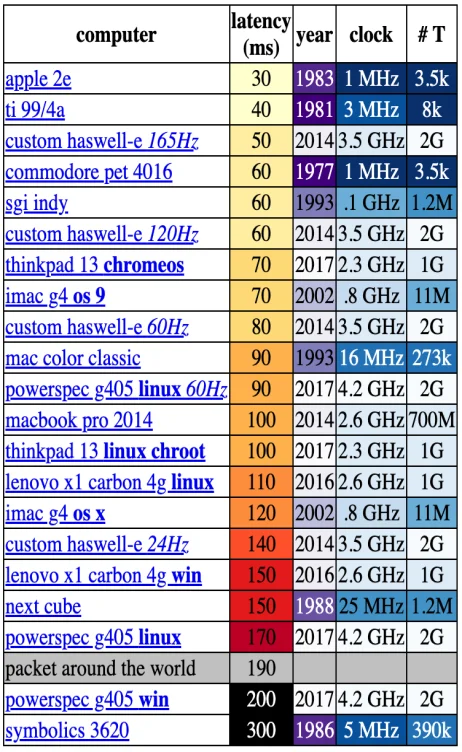

Dan Luu, for example, shows that “the older sites are, in general, faster than the newer ones, with sites that (visually) look like they haven't been updated in a decade or two tending to be among the fastest.”

In another post, measuring input latency (the time between pressing a key and seeing the result on screen), Luu shows that “almost every computer and mobile device that people buy today is slower than common models of computers from the 70s and 80s.”

It’s not just websites and hardware. Even IDEs, where you’d think developers would be at their most perfectionistic, have degraded.

As Julio Merino, a senior software engineer at Snowflake, writes, “Even though we do have powerful console editors these days, they don’t quite offer the same usable experience we had 30 years ago. In fact, it feels like during these 30 years, we regressed in many ways, and only now are reaching feature parity with some of the features we used to have.”

This isn’t to say everything is worse than it once was. When you look closely, though, progress is not assured, and improvements in one direction often mean degradation in another. We can’t count on software and technology just getting better, and the myth of progress increasingly feels hollow.

End-users and enshittification

Cory Doctorow’s term “enshittification” was named the American Dialect Society’s 2023 word of the year. The term describes a specific pattern in tech platforms: They become successful, then worsen over time as their owners extract more profit. It has achieved the popularity it has because it taps into an even wider sentiment: Users increasingly expect to be disappointed by software.

Right now, we have a generation of digital natives accustomed to using high-speed Internet-connected devices from birth, and we have experts who’ve seen the evolution of software from its earliest days. End-users have used swathes of other software and have many comparison points. They’re not experts or QA departments, no, but they can’t be dismissed with a “Have you tried turning it off and on again?”

As technology has spread across generations, and as technology institutions have themselves endured for decades, users have gained the experience to land substantial criticism. The chief example is Google.

Users have been complaining about the quality of Google search results, the proliferation of ads, and the dominance of search-optimized content for years. Recently, however, users have gotten some validation: Some research shows that Google really has gotten worse, and some investigators have found evidence of business decisions that might be behind the decline.

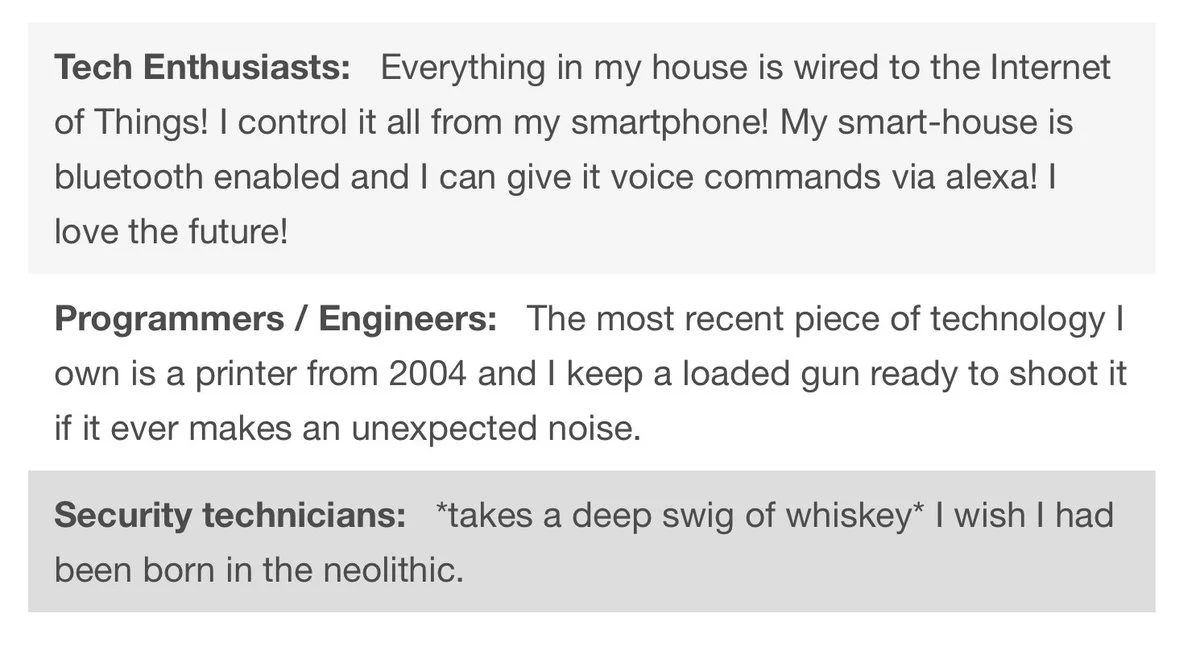

The takeaway, for our purposes, is the seemingly new confidence non-technical users have to proclaim software broken or degraded. After all, there has long been a demarcation (immortalized in the meme below) between end-users and tech enthusiasts on one side and programmers, engineers, and technical experts on the other.

Now, however, a common developer complaint has reached the masses, and it feels like the secret is out.

Software engineers are not, really, engineers

Don’t rush to Twitter – that’s neither an insult nor a compliment.

Over the decades, we’ve used a variety of metaphors to describe software development (e.g., software architecture, lean development, software engineering), but it’s really something else entirely. As a result, when we talk about broken software, we also have to talk about the false expectations we set implicitly and explicitly, privately and publicly.

Software is more complex than anything humanity has ever built

The Manchester Baby, the first electronic stored-program computer, ran its first program in 1948, and in the intervening decades, we’ve never fully grappled with how complex software development is. There’s a real case to be made that software is more complex than anything humanity has ever built.

In the past, we’ve relied on metaphors to help explain software and the work of building it, but in the process, we’ve lost sight of just how complex software is. No other industry tasks individuals with working across this much complexity and resisting this much fragility. The more we understand this, the more we can understand the scope of the challenge to create “high-quality software.”

Here, it’s best to turn back to some of the field’s pioneering thinkers. By the late 1980s, software had come a long way since the Manchester Baby but was still far from becoming one of the world’s most sought-after career paths. Technologists were reckoning with the progress so far and the progress yet to come.

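In the essay “On the cruelty of really teaching computing science,” published in 1988, Edsger W. Dijkstra points to the enormous span of scales a programmer must handle, from a single bit to a few hundred megabytes, or from a microsecond to half an hour of computing, a ratio of roughly a billion to one. “The programmer is in the unique position,” he writes, “that his is the only discipline and profession in which such a gigantic ratio, which totally baffles our imagination, has to be bridged by a single technology.” You can see echoes of this idea in memes alluding to the miraculousness of computers.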

Apparently, it’s cruel to teach computer science to humans and to rocks.

In the same essay, Dijkstra also writes that programmers have to be able to “think in terms of conceptual hierarchies that are much deeper than a single mind ever needed to face before.” The computer, Dijkstra continues, “confronts us with a radically new intellectual challenge that has no precedent in our history.”

Decades later, we’re still trying to make new metaphors and squash old ones. Martin Fowler, for example, writes that many people “liken building software to constructing cathedrals or skyscrapers.” But it’s a bad comparison. Building software, he writes, “exists in a world of uncertainty unknown to the physical world.” Software customers barely know what they want, and the tools developers use to fulfill their requests (libraries, languages, platforms, etc.) change every few years.

Here, the breakdown of the comparison is actually helpful. “The equivalent in the physical world,” Fowler writes, “would be that customers usually add new floors and change the floor-plan once half the building is built and occupied, while the fundamental properties of concrete change every other year.”

One cause of continual brokenness is that software development is just really complex and difficult. Comparisons to other fields are inevitably inaccurate because already complex software work is constantly changing. Perhaps expecting anything but broken software is foolish.

Software issues are super-salient

Despite all this complexity, users are unlikely to give software room to break. The nature of software, and the nature of how we use it, tends to make software issues salient and unavoidable.

The real world can absorb failure much more easily than the digital one, so the salience of errors tends to be different. When a driver hits a pothole, for example, they will likely blame the weather for causing the problem and a lack of upkeep for why it remains. When a user encounters a bug in an app, they blame the app or the company behind it.

Developers working on self-driving cars feel this most harshly. According to one study, Waymo’s 7.14 million fully autonomous miles showed an 85% lower rate of injury-causing crashes than human drivers. But how much does that matter when self-driving car crashes, rare though they may be, are more salient than crashes caused by human drivers?

As DHH, co-founder of 37signals, writes, “Being as good as a human isn’t good enough for a robot. They need to be computer good. That is, virtually perfect. That’s a tough bar to scale.” Unfortunately for the rest of us, even in much less deadly circumstances, the expectation to be “computer good” remains.

Software degrades invisibly but breaks visibly

Many modern (“modern”) technology systems run on decades-old technology, support for which is sometimes hard to find. No better example exists than COBOL, a nearly 70-year-old language that nonetheless handles $3 trillion in financial transactions every day.

As Marianne Bellotti, author of Kill It with Fire: Manage Aging Computer Systems (and Future Proof Modern Ones), writes, “We are reaching a tipping point with legacy systems. The generation that built the oldest of them is gradually dying off, and we are piling more and more layers of society on top of these old, largely undocumented and unmaintained computer programs.”

The complexity and rate of change Fowler described compound into yet more complexity and fragility: We have to repair as much as, or more than, we build.

That degradation is invisible to users, though, until it results in breakage. Then, unlike a wobbly table that can still be used or an old car that can be traded in, the brokenness becomes hyper-visible until it’s fixed.

A similar pattern applies to bugs: Every Leap Day, various software systems around the world fall apart even though the date is entirely predictable. The software is working until one day, it very much isn’t.
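To make the Leap Day point concrete, here is a minimal Python sketch of two classic, entirely predictable date bugs. The function names (`one_year_later_naive`, `is_leap_naive`, and their fixed counterparts) are hypothetical illustrations, not code from any system mentioned here, and clamping February 29 to February 28 is just one possible policy.

```python
from datetime import date


def is_leap_naive(year: int) -> bool:
    # Hypothetical buggy rule: "every fourth year is a leap year".
    return year % 4 == 0


def is_leap(year: int) -> bool:
    # Full Gregorian rule: divisible by 4, except centuries not divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def one_year_later_naive(d: date) -> date:
    # Hypothetical "renew for one year" logic that just bumps the year.
    # date.replace raises ValueError if the result (Feb 29 in a non-leap year) doesn't exist.
    return d.replace(year=d.year + 1)


def one_year_later(d: date) -> date:
    # Assumed policy: if Feb 29 doesn't exist next year, fall back to Feb 28.
    # Changing only the year can fail solely for Feb 29, so day=28 is always valid here.
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        return d.replace(year=d.year + 1, day=28)


if __name__ == "__main__":
    leap_day = date(2024, 2, 29)
    print(one_year_later(leap_day))  # 2025-02-28
    try:
        one_year_later_naive(leap_day)
    except ValueError as err:
        print("naive version failed:", err)
    # The naive leap-year rule disagrees with the Gregorian rule in 2100.
    print(is_leap_naive(2100), is_leap(2100))  # True False
```

The naive leap-year rule agrees with the real one for every year from 1901 through 2099, which is exactly why this kind of bug can sit unnoticed until the date finally arrives.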

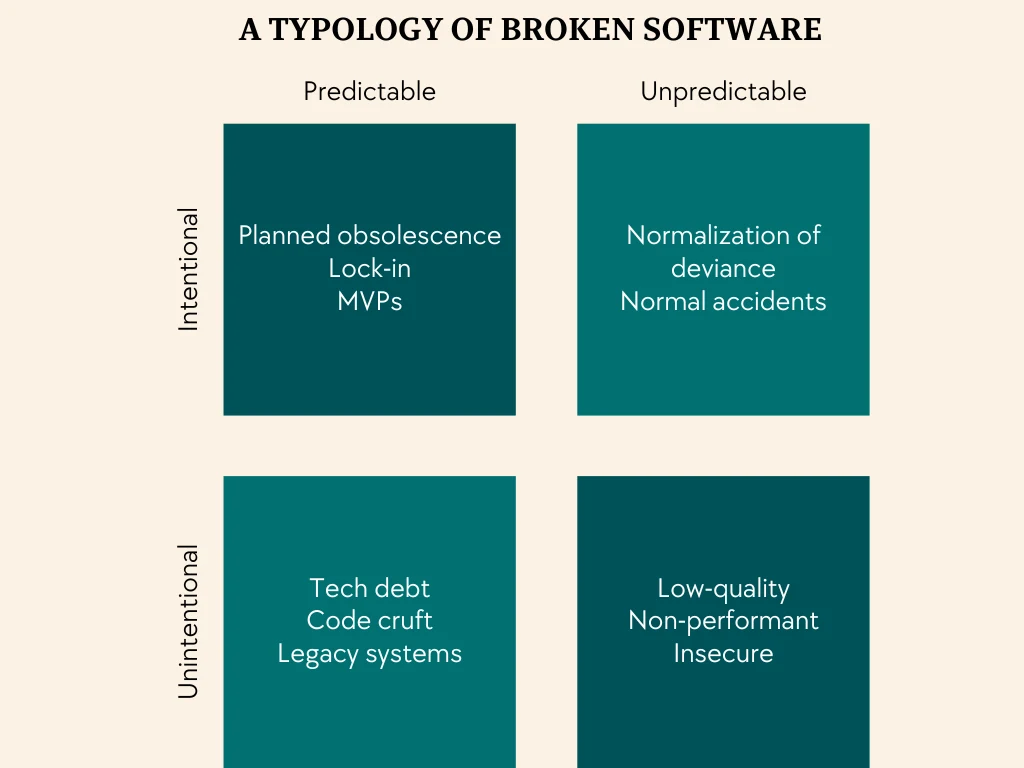

A typology of brokenness

There are many ways to discuss and complain about broken and low-quality software, but two factors are most important to advance these conversations: Was the breakage intentional? And was it predictable? The more intentional and predictable, the more we can talk about the humans involved, and the more unintentional and unpredictable, the more we can talk about the inherent complexity of building software.

Intentional and predictable

This type of software breakage occurs when companies intentionally produce software that’s broken on delivery, or software they could predict would break.

Examples include:

Planned obsolescence: When companies design products to degrade or break sooner than they need to.

Lock-in: When companies design software that locks users into a relationship with them (by not allowing them to export data, for example) or when they build in a lack of interoperability (e.g., messaging between iPhones and Androids).

MVPs: When startups build and debut products that work to some degree but are faulty or deficient in most other respects.

The decision to intentionally build predictably broken software can actually be a good one or a bad one, depending on whose interests you’re prioritizing. Planned obsolescence is great for shareholders but bad for users, and MVPs are a great way to start building software as long as the deficiencies are filled out over time.

Intentional and unpredictable

This type of software breakage occurs when companies intentionally produce software that will break (or intentionally use development practices that lead to software that will break) even though they can’t predict how and when it will break.

Examples include:

Normalization of deviance: When companies normalize taking shortcuts and reinforce poor programming practices. As Dan Luu writes, “We will rationalize that taking shortcuts is the right, reasonable thing to do,” and eventually, as companies choose to normalize deviance, unpredictable breakages will result.

Normal accidents: When companies build systems that are inherently prone to failure despite not being able to predict how they’ll fail. A key example of this, as Marianne Bellotti writes, is tight coupling. “In tightly coupled situations,” she writes, “There’s a high probability that changes with one component will affect the other [...] Tightly coupled systems produce cascading effects.”

Again, there’s a good/bad valence here that you can’t determine from the outside in. Startups often benefit from tightly coupled monoliths, for example, and may only benefit from migrating to microservices once they reach a certain scale. A breakage might be “intentional,” but that doesn’t mean anyone did anything wrong.
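Bellotti’s point about cascading effects in tightly coupled systems can be sketched as a graph problem. The Python below assumes a hypothetical set of services in which every dependency is tight (no fallback, cache, or timeout), then finds everything that goes down when one service fails by walking the dependency edges in reverse with a breadth-first search.

```python
from collections import deque

# Hypothetical service graph: each key depends on the services in its list.
DEPENDS_ON: dict[str, list[str]] = {
    "checkout": ["payments", "inventory"],
    "payments": ["auth"],
    "inventory": ["database"],
    "auth": ["database"],
    "search": ["index"],
    "index": [],
    "database": [],
}


def dependents_of(graph: dict[str, list[str]]) -> dict[str, list[str]]:
    # Invert the edges: for each service, list the services that call it.
    reverse: dict[str, list[str]] = {name: [] for name in graph}
    for service, deps in graph.items():
        for dep in deps:
            reverse[dep].append(service)
    return reverse


def blast_radius(graph: dict[str, list[str]], failed: str) -> set[str]:
    # Breadth-first search over reverse edges. Assumption: every dependency
    # is tight, so any caller of a failed service fails too, transitively.
    reverse = dependents_of(graph)
    down = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for caller in reverse[current]:
            if caller not in down:
                down.add(caller)
                queue.append(caller)
    return down


print(sorted(blast_radius(DEPENDS_ON, "database")))
# ['auth', 'checkout', 'database', 'inventory', 'payments']
print(sorted(blast_radius(DEPENDS_ON, "index")))
# ['index', 'search']
```

Loosening an edge (say, letting `search` keep serving from a stale copy when `index` is down) would shrink that blast radius; the hard part in real systems is knowing which edges are tight before something fails.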

Unintentional and predictable

This type of software breakage occurs when companies unintentionally produce or retain software that they nevertheless could have predicted would break.

Examples include:

Tech debt and code cruft: When companies encourage speed over quality or limit developers from performing repair work, tech debt and code cruft build up. People outside the development team tend not to understand the risk of this until a breakage happens, making this unintentional and yet predictable.

Legacy systems: When companies maintain systems that fall further and further out of date, maintenance gets harder and integrations become harder to keep working. Legacy systems are often quite noticeable to people internally, making their inevitable failures predictable, but the intention is typically to keep things working as long as necessary before a migration is possible.

Here, technological and business needs cross and sometimes conflict. For example, no one cares more about tech debt than developers, but it often takes a lot of political capital to convince higher-ups to let them clear up tech debt.

As Fowler writes, “A user can tell if the user-interface is good. An executive can tell if the software is making her staff more efficient at their work. Users and customers will notice defects, particularly should they corrupt data or render the system inoperative for a while. But customers and users cannot perceive the architecture of the software.”

The less visible quality is, especially to users and the people in charge, the harder it will be to fix. No one in the business intends the inevitable results, but the developers typically have a cool “I told you so” ready to go.

Unintentional and unpredictable

This type of software breakage is the result of developers building software that just doesn’t work well or doesn’t work well in the face of adversaries.

Examples include:

Software that isn’t performant: Here, companies might not encourage developers to prioritize performance, or developers themselves might not know how to build very performant software. Nelson Elhage, an engineer and researcher at Anthropic, writes, for example, that performance is “understood intellectually” but that it’s “rarely appreciated on a really visceral level, and also is often given lip service more so than real investment.”

Defeated: When companies build software that, regardless of its quality and security practices, is defeated by adversaries such as hackers and spammers. Again, neither the business nor the developers plan to build a product vulnerable to DDoS attacks, but a 503 is a 503.

When we call this type unintentional, there’s some room for negligence. A developer could make a given component perform better, for example, or find ways to make it more secure, but internal or external deadlines and priorities might encourage otherwise. As we’ve emphasized, development is a complex, wicked problem, so broken software might just need iteration and feedback.

It’s time to build trust

Over the years, the technology industry has taken so many shortcuts that trust itself has become a form of tech debt.

A 2023 study shows, for example, that trust in the technology industry is plummeting: Almost a quarter of the public considers the technology industry less trustworthy than the average industry, a share exceeded only by industries like health insurance and banking.

Evan Armstrong, a technology writer, clarifies the irony by writing, “The industry of the future has lost the trust of the present.” The reasons for this are many, extending up to big problems like antitrust and the “techlash” and down to more mundane problems like, as we’ve talked about here, broken and faulty software.

With AI, the possibility of breakage rises – amplifying people’s already existing anxieties about technology. What might have been a bug in a previous era of software is now a hallucination – something we’re supposed to expect and accept.

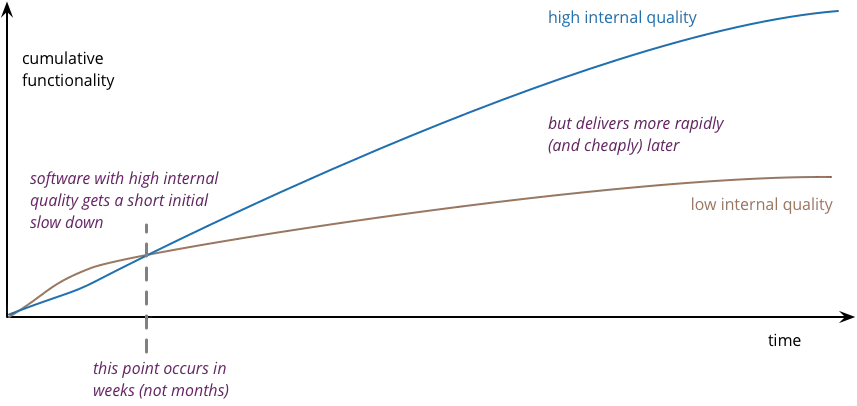

Only with an industry-wide framework shift can we start to produce better software. This framework will only emerge when we reckon with how hard it is to build and maintain software. Martin Fowler articulates the issue as a tension between internal and external quality.

Everyone cares about external quality to some degree, but we can’t advocate for internal quality until, he writes, we can show how it “lowers the cost of future change” despite “a period where the low internal quality is more productive than the high track.”

In a way, the entire discussion of software quality hinges on where the lines in the diagram above cross: How well do we need to build, and for how long, until high internal quality pays off? The question comes down to trust: If we want users to trust us again, then we need to advocate for enough trust, inside our own organizations, to build high-quality software despite the initial cost in time.
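The crossover question can be made concrete with a toy model. The sketch below is an assumption for illustration only, not Fowler’s model or real data: a team that neglects internal quality starts at a higher velocity (`v_low`) that drops by a fixed amount (`cruft`) each week, while a team that maintains internal quality holds a steady velocity (`v_high`). Cumulative output is then v_low·t − cruft·t²/2 versus v_high·t, and setting them equal gives a crossover at t = 2(v_low − v_high)/cruft.

```python
import math


def cumulative_low(t: float, v_low: float, cruft: float) -> float:
    # Hypothetical: velocity starts at v_low and falls by `cruft` per week,
    # so cumulative output is the area under v_low - cruft * t.
    return v_low * t - cruft * t * t / 2


def cumulative_high(t: float, v_high: float) -> float:
    # Hypothetical: steady velocity because internal quality is maintained.
    return v_high * t


def crossover_week(v_low: float, v_high: float, cruft: float) -> float:
    # Solve v_low*t - cruft*t^2/2 = v_high*t for t > 0.
    if v_low <= v_high:
        return 0.0  # high internal quality is never behind in this model
    if cruft <= 0:
        return math.inf  # no decay, so the low-quality line never gets caught
    return 2 * (v_low - v_high) / cruft


t_star = crossover_week(v_low=10, v_high=8, cruft=0.5)
print(t_star)  # 8.0
print(cumulative_low(t_star, 10, 0.5), cumulative_high(t_star, 8))  # 64.0 64.0
```

The closed form makes the trade-off explicit: the smaller the head start (`v_low − v_high`) or the faster cruft accumulates, the sooner high internal quality pays off. The linear decay is only plausible while `v_low − cruft·t` stays positive, which at the crossover requires `v_low < 2·v_high`.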

Software will still break, of course, but we can start to shift away from brokenness being the default.