In Part 1 I made the case for trying saucectl, and explained how it solves a long-standing problem and contributes to improved team dynamics.

In Part 2 I'll dive into some details, and share some tips and some lessons learned. I'll end by addressing a common objection I can anticipate.

A Different Test Design

Moving test execution to saucectl, like any large change in how tests run, will impact test design. Don't expect to simply copy and paste existing tests. More likely, you'll want to refactor some of your tests to run well in this new paradigm.

Sight Unseen

The tests and suites you run in saucectl won't run locally. They'll begin running in the Sauce Labs cloud. Browser windows and mobile device windows will not appear on your desktop. You won't immediately see anything happening.

This changes things. For instance, you may come to appreciate logging more than you did before. When a suite of tests is running, especially a larger and longer-running suite, you may want console output in your terminal as it progresses. It could be simple, but helpful:

    config page check
    our test PASS here; begin cleanup
    logout success from merchant; END TEST

As a test engineer who runs your tests regularly, you likely already know where your current logging hits the mark (or falls short).
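
One way to produce lines like the sample output above is a tiny step logger that each test calls at its milestones. This is a minimal sketch in plain JavaScript, not part of saucectl or Playwright. The names `formatStep` and `logStep` and the test name `merchant-config` are hypothetical, and whether a test's console output reaches your terminal depends on how your runner and reporter are configured.

```javascript
// Hypothetical helper: not part of saucectl or Playwright.
// Formats one progress line with a UTC timestamp and the test name.
function formatStep(testName, message, now = new Date()) {
  const time = now.toISOString().slice(11, 19); // HH:MM:SS in UTC
  return `[${time}] ${testName}: ${message}`;
}

// Writes the formatted line to stdout so it shows up in console output.
function logStep(testName, message) {
  console.log(formatStep(testName, message));
}

// Example milestones, mirroring the sample lines above.
logStep('merchant-config', 'config page check');
logStep('merchant-config', 'our test PASS here; begin cleanup');
logStep('merchant-config', 'logout success from merchant; END TEST');
```

Prefixing each line with the test name matters once several tests log at the same time, because their lines will interleave.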

As a thought experiment, imagine the first 20 tests that you are going to run tomorrow morning. Now imagine launching them via one command in a terminal, something like this:

    saucectl run playwright -c .sauce/my_regression_suite.config.yml

As those churn away in the background – they're not tying up your machine as they run – you move on to other work, perhaps running more and different tests locally. In any case, you glance over at that terminal occasionally to see how your tests are doing. What, if anything, would you want to see there? What sort of logging would be helpful?

Parallel Plays

I have a few tests which must run sequentially. I know in advance what those are, and I have a good plan as to when and how to run them. It's OK.

Most of the time, I want most of my tests to run multithreaded. In my case, this can massively reduce time to result for some suites. A few of my suites appear to run especially well in parallel.

This requires atomic test design, with no dependencies on execution order. As you run more tests – and more tests together – you will favor a more atomic test design pattern.

I have gladly accepted this tradeoff. Admittedly, I have a higher file count. In this repo now, there lives a higher number of smaller, independent, idempotent, reliable tests. Without context, a reviewer on my PR might object, understandably: hey, these four tests are similar, and you could have put them all into one file.

Yes, I could have. But I want these tests to run reliably, and I want them to run fast. And I want them to "play nice together" in suites. And, of course, I want them to run reliably over time, for days in a row. This is how it's done. Some tradeoffs are worth making.
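
To make the idea concrete, here is a minimal sketch of atomic, order-independent tests in plain JavaScript. It uses no test framework. The in-memory `store` stands in for the system under test, and `createMerchant`, `deleteMerchant`, and the two test functions are hypothetical names chosen for illustration. Each test creates its own uniquely named record, checks only that record, and cleans up after itself, so the tests can run in any order or all at once.

```javascript
// In-memory stand-in for the system under test (an assumption for illustration).
const store = new Map();
let counter = 0;

// Hypothetical setup helper: every call yields a record no other test shares.
function createMerchant(label) {
  const id = `${label}-${process.pid}-${++counter}`;
  store.set(id, { id, active: true });
  return id;
}

// Hypothetical cleanup helper: safe to call even if the record is already gone.
function deleteMerchant(id) {
  store.delete(id);
}

// Each test owns its data from setup to cleanup and reads nothing it did not create.
async function testDeactivate() {
  const id = createMerchant('deactivate');
  try {
    store.get(id).active = false;
    return store.get(id).active === false;
  } finally {
    deleteMerchant(id);
  }
}

async function testRename() {
  const id = createMerchant('rename');
  try {
    store.get(id).name = 'Renamed';
    return store.get(id).name === 'Renamed';
  } finally {
    deleteMerchant(id);
  }
}

// Start both tests together, in reverse order: results do not depend on ordering.
async function runAll() {
  const results = await Promise.all([testRename(), testDeactivate()]);
  return { results, leftovers: store.size };
}

runAll().then(({ results, leftovers }) => {
  console.log(`passed: ${results.every(Boolean)}, leftover records: ${leftovers}`);
});
```

The cleanup in `finally` is what keeps the tests repeatable for days in a row: a failed assertion still leaves the environment as the test found it.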

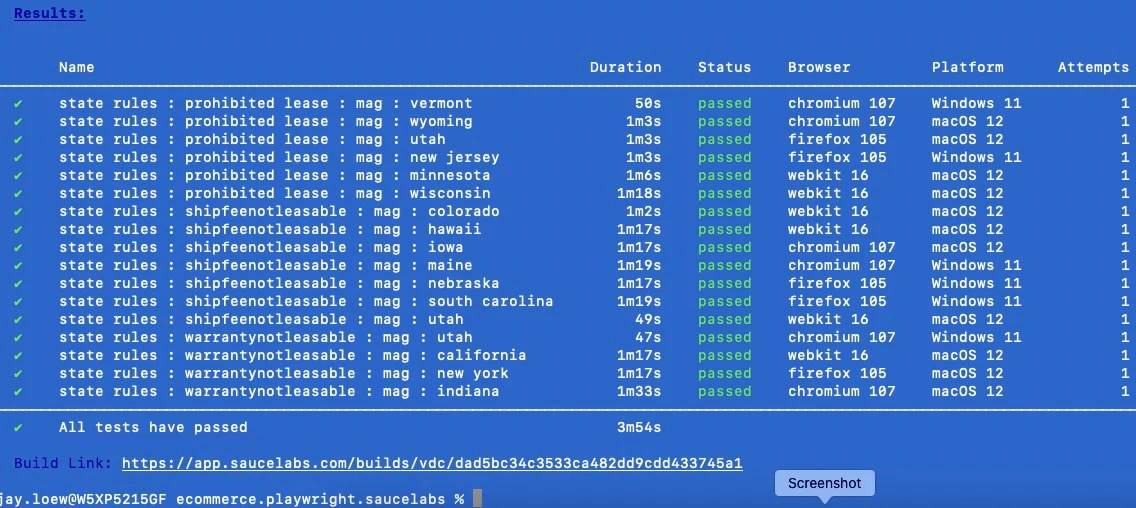

With a little bit of work, you can achieve some big wins in parallel test execution. On a good day, I can even have multiple terminals up, with suites spawned from each, and a finished product looking like this:

Your Sauce Labs Dashboard

In this paradigm, you won't normally watch a test run. But you certainly could. Your Sauce Labs dashboard allows you to both watch a test in progress, and view a replay. A moment after a test finishes, video and logs are available for your inspection.

You can also abort a currently running test, delete results, and view failure analysis over time. (The failure analysis, of course, requires some run history.) There is more than I'll cover here, but let's have a look at the basic goodies:

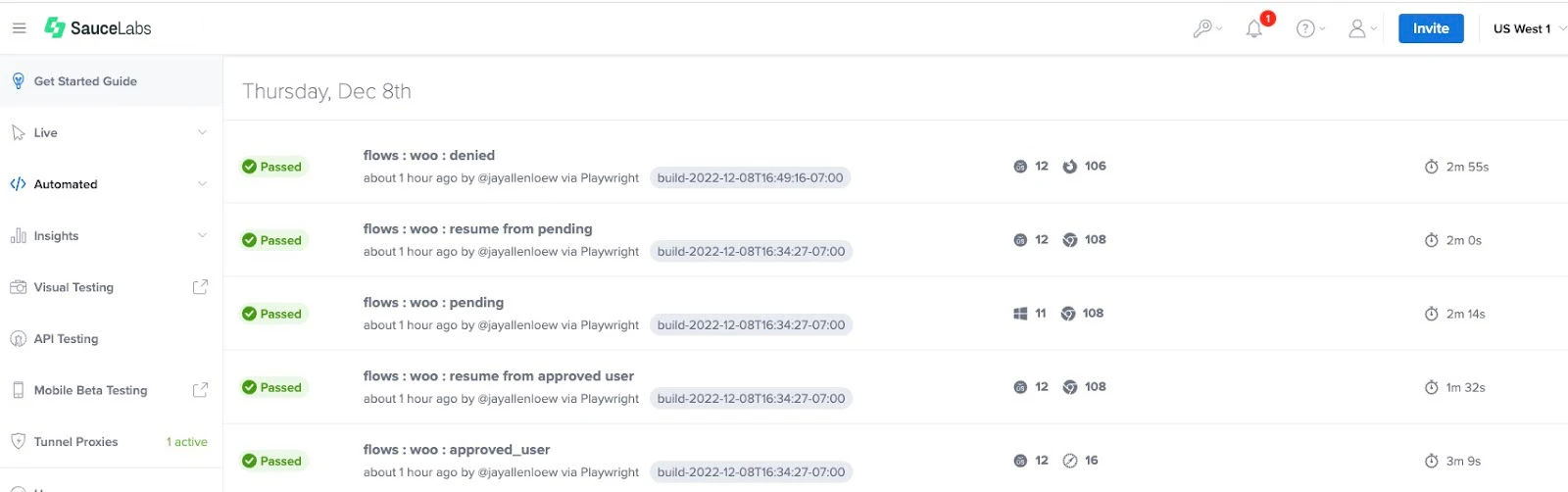

Here is the top of my dashboard, clipped off, showing the first 5 tests of about 200 tests that I ran today. At a glance, I can see that these longer-running tests have passed, and in which browsers, and how long they took.

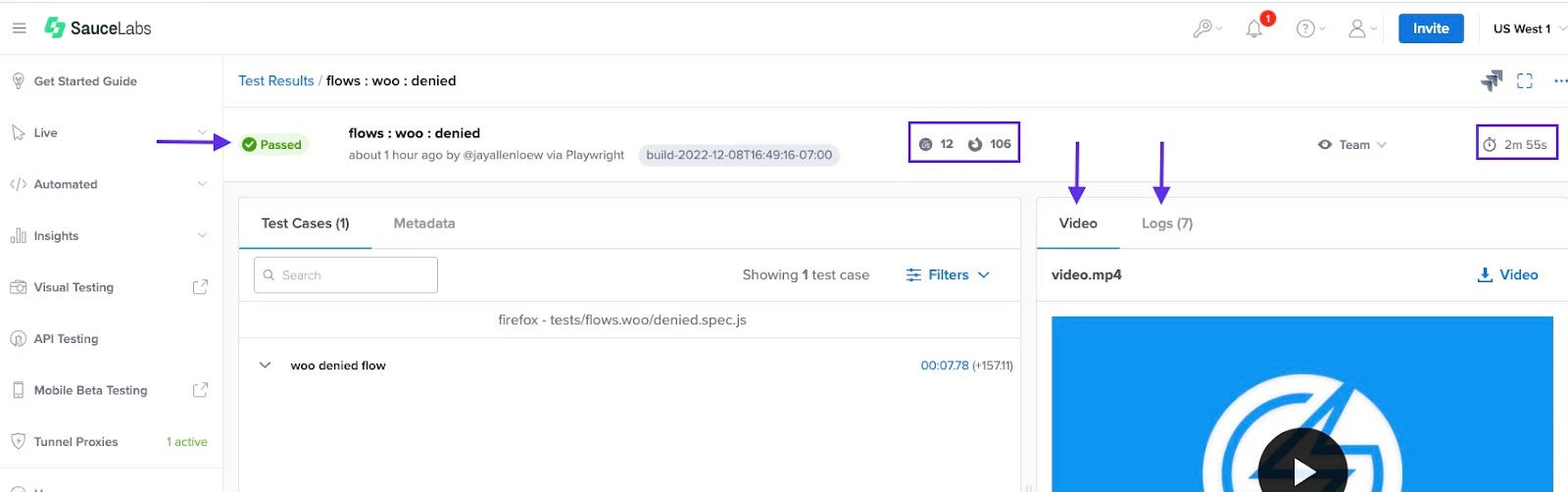

Clicking on the first one there, I drill down for more:

Here I can view the metadata for the test. I don't often need it, but it can be helpful during troubleshooting.

I can click that video and actually watch the playback.

And I can view a number of different logs. In this case, 7 different logs were captured. I typically care about 2 of them when all is well.

Again, I can view which OS-browser combination the test ran in, and see how long it took. And if I wish, I can delete the test artifact altogether.

Inconvenient Truths

There is one thing saucectl did that I didn't like, but that had to be done: it confirmed, once and for all, that my primary test environment is anemic. It has not been right-sized. I suspected this long before the spike in test execution that saucectl has enabled. Today, there is no denying it: our test environment is not keeping up with us. My employer will soon have some decisions to make. Will we invest in test infrastructure or not? How serious are we, really, about things like continuous integration, and streamlined deployment? We are lagging behind the industry generally, and we are way behind where we need to be, even for the roadmap for the next couple of quarters.

Sauce Labs can scale up to heights we are not likely to require any time soon. Sauce Labs will not hold us back.

My team writes efficient, accurate, dependable tests. My team will not hold us back.

Already, we are maxing out our primary test environment, and we are just getting started. That is the one thing that could potentially hold us back.

In addition to capacity, you must consider test data. Does your employer already have a proper test data management (TDM) solution in place? This too is a potential roadblock. Atomic, idempotent tests typically require semi-real, available, scalable test data. In other words, if a form under test requires an address, you probably already know this: you can't just enter 123 Main St over and over and expect it to work. Garbage in, garbage out. My tests won't pass if they programmatically fill in random, simple nonsense.

Furthermore, I can't over-rely on fake data generators. Some of my tests require data that looks a lot more like production (actual places, real dates, realistic amounts, and so forth).
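
One way to balance uniqueness with realism is to draw from a curated pool of realistic values and assign them deterministically per parallel worker, rather than generating random strings. The sketch below is plain JavaScript under stated assumptions: `ADDRESS_POOL`, `addressFor`, and the idea of a numeric worker index are hypothetical, and the sample entries are well-known public addresses used only for illustration, not data from any real test suite.

```javascript
// Hypothetical curated pool: real-looking values instead of random nonsense.
const ADDRESS_POOL = [
  { street: '1600 Pennsylvania Ave NW', city: 'Washington', state: 'DC', zip: '20500' },
  { street: '350 Fifth Ave', city: 'New York', state: 'NY', zip: '10118' },
  { street: '233 S Wacker Dr', city: 'Chicago', state: 'IL', zip: '60606' },
];

// Deterministically maps a worker index to a pool entry, so parallel workers
// use different addresses as long as there are no more workers than entries.
function addressFor(workerIndex) {
  if (!Number.isInteger(workerIndex) || workerIndex < 0) {
    throw new RangeError('workerIndex must be a non-negative integer');
  }
  return ADDRESS_POOL[workerIndex % ADDRESS_POOL.length];
}

console.log(addressFor(0).street);
console.log(addressFor(4).city);
```

A real pool would need to be at least as large as the number of tests running at once, which is one reason test data becomes a capacity question of its own.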

Objections

In closing, I will now answer an objection I can anticipate and have already heard: Your tests should be running in Jenkins.

First, we should embrace a software development principle: separation of concerns. If you are using Jenkins as a build tool, very well. Personally, I think you'd do well to consider modern-day alternatives. But in the end, there is nothing inherently wrong with using Jenkins as designed.

The objection I've personally heard is founded, I suspect, on a misunderstanding, or perhaps on an unrealistic expectation. The desire appears to be for a simple test runner: just give me a button to click, and the tests run.

I would argue that the overhead of Jenkins doesn't justify its existence in your stack, if that's the reason you want it. In that case, it adds no value, and asks too much of you in return. It takes time and effort to get a Jenkins box set up, and those jobs don’t maintain themselves.

Disclaimer. Yes, we still have Jenkins jobs. But we're not creating new ones. And the old ones are on a roadmap, with deprecation dates. Jenkins does not appear anywhere on any diagram of our future architecture.

I do not disrespect Jenkins. It served the industry well for a long time. Alas, all good things come to an end, especially in software. As I am writing this today, Jenkins is certainly on its down slope, after a long and commendable run.

About the Author

Allen Loew is a Principal Quality Engineer at Progressive Leasing LLC, and a Sauce Labs advocate. In support of his current team, he has written a library of Playwright tests in JavaScript that run in Sauce Control. Previously, he wrote most of his tests in Java and Selenium. Allen was an early adopter of Sauce Control and is now all-in, exploring boundaries of efficiency with sharding and multithreaded test execution. Allen has championed Sauce Labs usage at his current and former employers, and has led training efforts for new users. His particular interests in testing include multi-platform coverage, concurrency, and localization.