Ensuring visual consistency across applications is becoming one of the most critical aspects of a quality assurance strategy. Functional validation confirms that features behave as customers expect. However, with functional testing alone, visual defects often go unnoticed until they directly affect end users or stakeholders.

As applications grow in complexity, visual testing is emerging as a crucial validation layer in quality assurance practice. Functional tests rarely catch subtle layout shifts, alignment inconsistencies, or styling glitches. These visual inconsistencies may not break any features, but they can erode user trust and misrepresent the brand. Introducing an additional layer of visual validation is therefore not just a technical improvement but part of a broader quality transformation.

This article explains how organizations can begin to adopt visual testing. I will cover identifying what is needed, aligning with stakeholders, and integrating visual testing into existing quality assurance processes and workflows. Visual testing is not an isolated effort; it must be approached as a foundational shift in how organizations think about their overall quality strategy. I will then examine the decisions teams need to make early on, the challenges they may encounter, and practical ways to lay the groundwork for broader adoption.

Why organizations should leverage visual testing

Most organizations rely heavily on validating whether features work as expected. As a result, they often miss visual inconsistencies until the application is live in production. These issues range from misaligned buttons to styling inconsistencies across browsers, and they can significantly affect user experience and brand perception.

These gaps can be addressed by automating the visual validation of web applications. Adopting visual testing becomes important when:

The application is complex and has many UI pages and visual elements, making manual visual checks challenging.

Teams require a quick and easy way to validate the UI without long-running functional tests.

The UI must be validated across many browser versions and mobile devices.

UI issues are frequently reported in production.

The product must meet strict branding or design standards.

Organizations want more confidence in the visual quality of their releases.

Modern frontend development relies on component-based frameworks such as React and Angular. Prebuilt UI components within these frameworks shorten development time, and because they are reused, they promote consistency and speed up adoption. It is therefore critical to validate them visually before teams use them widely, and to confirm that they meet design and product expectations. By integrating visual testing early, teams can catch visual issues before they become systemic problems.

Visual testing can also serve as a preventive measure. Proactive organizations understand that as software scales (especially on a microservice architecture), the likelihood of visual drift grows. The sooner visual testing is embedded, the more resilient the UI becomes over time.

How the pipeline works

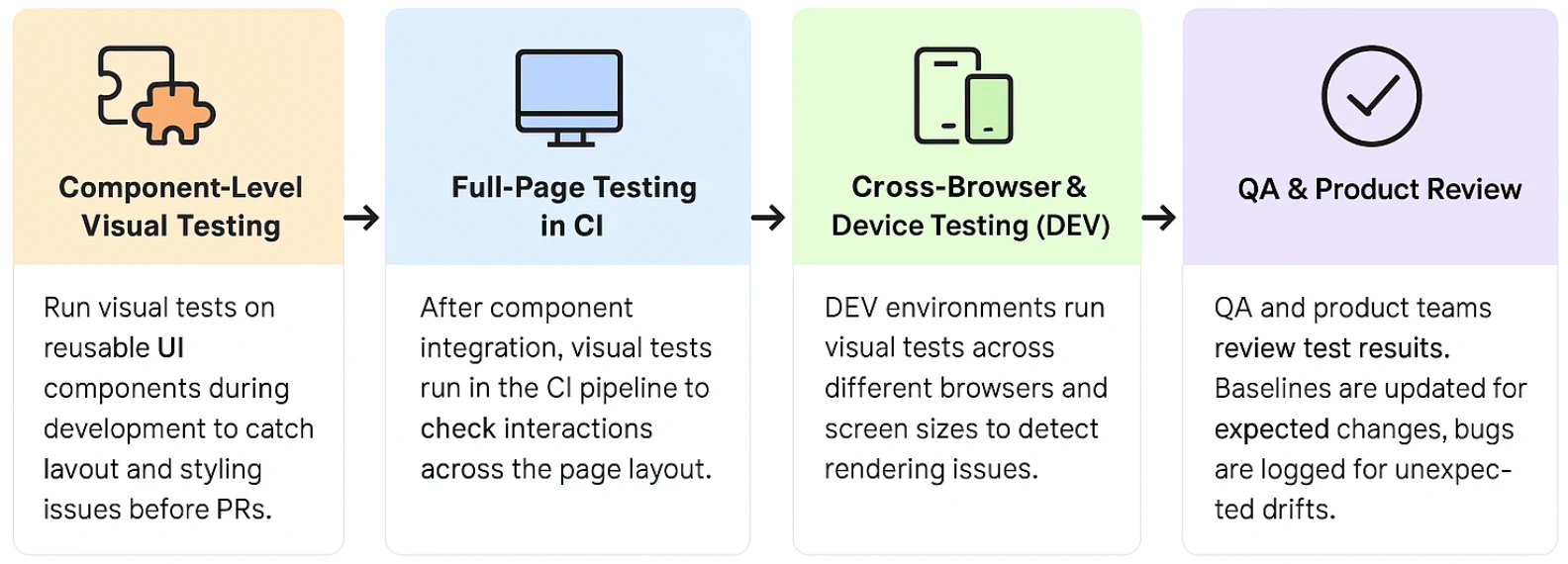

Below is a diagram that depicts how visual testing fits into the enterprise CI/CD workflow:

Visual tests on the component level: The development process for new reusable UI components includes visual tests, which verify the components against design specifications before developers submit their Pull Requests (PRs).

Full-page visual tests in CI: After developers integrate the component into their feature branch, the CI pipeline runs visual tests to verify page-level interactions between UI elements.

Cross-device validation in DEV: In the DEV environment, additional visual tests run across different browsers and devices to detect rendering problems.

QA and product review: The QA and product teams examine the test outcomes. If visual drift results from an intentional design change, the baseline is updated. If the drift is unintended, a bug is logged.

Organizations should implement visual testing at both the component and feature-integration levels to identify defects early. This reduces issues late in the release cycle and helps deliver a consistent, on-brand user experience.

Designing a visual testing strategy

There is much more to visual testing than just the tool itself. A successful visual testing approach starts with a well-defined assurance strategy. Here is a framework that organizations can leverage when adopting visual testing:

1. Identify critical use cases for visual validation

In the early stages of implementation, focus on the areas where visual testing delivers the most value. Not every part of the application needs visual validation immediately, so teams should begin by identifying critical user flows. Start with flows that are central to the application, such as login pages, dashboards, and search. These are the areas where visual defects affect users most.

Next, prioritize components that are frequently updated or reused across multiple pages. These components are more prone to visual regressions because they change often, so they should be tested visually early and in isolation, for example in Storybook or as standalone web components.

Pay attention to brand-sensitive pages, specifically those used for marketing or customer engagement. Visual consistency on these pages is crucial for maintaining trust and reinforcing brand integrity.

Finally, focus on component integration: validate how individual components appear and interact when combined on the same page. This helps ensure consistent layout and branding in real scenarios.

2. Define visual quality standards

Organizations need to define clear visual quality standards so that visual testing delivers consistent results. Start by setting pixel-difference thresholds that determine whether a test passes or fails. These thresholds filter out insignificant variations, such as minor rendering differences, so that teams can focus on meaningful regressions.
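To make the idea of a threshold concrete, here is a minimal Python sketch of a pass/fail check. It assumes screenshots are already decoded into equal-sized grids of RGB tuples; the names `diff_ratio` and `visual_check_passes` and the default values (`channel_tolerance=8`, `max_diff_ratio=0.001`, i.e. 0.1% of pixels) are hypothetical and illustrative, not the defaults of any particular tool. Commercial platforms use their own, more sophisticated comparison algorithms.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def diff_ratio(baseline: Image, candidate: Image, channel_tolerance: int = 8) -> float:
    """Fraction of pixels whose colour differs by more than channel_tolerance
    on any RGB channel. The tolerance absorbs tiny anti-aliasing differences."""
    if len(baseline) != len(candidate) or any(
        len(b) != len(c) for b, c in zip(baseline, candidate)
    ):
        raise ValueError("images must have identical dimensions")
    total = 0
    changed = 0
    for row_b, row_c in zip(baseline, candidate):
        for pb, pc in zip(row_b, row_c):
            total += 1
            if max(abs(x - y) for x, y in zip(pb, pc)) > channel_tolerance:
                changed += 1
    return changed / total if total else 0.0


def visual_check_passes(baseline: Image, candidate: Image,
                        max_diff_ratio: float = 0.001,
                        channel_tolerance: int = 8) -> bool:
    """Pass if the share of meaningfully changed pixels stays within the threshold."""
    return diff_ratio(baseline, candidate, channel_tolerance) <= max_diff_ratio
```

Two knobs are separated on purpose: the per-channel tolerance decides what counts as a changed pixel, and the ratio decides how many changed pixels a page may have before the test fails.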

Establish rules for acceptable changes. Maintain a visual baseline that both design and QA teams have reviewed; it serves as the reference point for all future validations. When these standards are set early, teams avoid unnecessary debate over visual inconsistencies, and triaging visual test results becomes more efficient and objective.

In addition to baseline alignment and pixel thresholds, it is crucial to handle dynamic content to reduce false positives. Elements such as timestamps and user-specific data can cause inconsistent test results. Ideally, organizations should adopt a static data feed strategy, which provides a known, repeatable data state across test runs. Every visual test execution then compares against a stable and predictable baseline, which significantly improves reliability and reduces noise.
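A static data feed can be as simple as a fixed fixture that the page renders from during visual test runs. The Python sketch below assumes a hypothetical dashboard whose header shows a user name, an unread count, and a last-login time; `DashboardData`, `STATIC_FEED`, `render_header`, and `data_for_run` are illustrative names, and `render_header` returns text only as a stand-in for what the real UI would draw.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class DashboardData:
    """Everything the (hypothetical) dashboard header needs to render."""
    user_name: str
    unread_messages: int
    last_login: datetime


# Static feed: a fixed, known data state used only for visual test runs.
STATIC_FEED = DashboardData(
    user_name="Test User",
    unread_messages=3,
    last_login=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)


def data_for_run(visual_test: bool, live_source: Callable[[], DashboardData]) -> DashboardData:
    """Use the static feed during visual test runs and live data otherwise."""
    return STATIC_FEED if visual_test else live_source()


def render_header(data: DashboardData) -> str:
    """Stand-in for the UI: the text the header component would display."""
    return (f"Welcome back, {data.user_name} | "
            f"{data.unread_messages} unread | "
            f"Last login {data.last_login:%Y-%m-%d %H:%M} UTC")
```

Because the timestamp and user details are frozen, every visual run renders identical content, so any pixel difference that remains points at a genuine UI change rather than at the data.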

However, where a static data feed is not feasible, the team can consider "ignore regions," a feature that excludes dynamic sections of the UI from visual comparison. Ignore regions can stabilize noisy visual tests, but they must be used judiciously: they skip testing of the excluded areas, so bugs in those areas can go undetected. Treat them as a last resort, after all attempts to control test data have been exhausted. The goal is to reduce flakiness without compromising overall confidence in visual coverage.
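Conceptually, an ignore region is a rectangle whose pixels are skipped during comparison. The Python sketch below shows that logic on RGB pixel grids; the `(x, y, width, height)` region format and the function names are assumptions for illustration, not the configuration format of any specific tool. Note how a change inside the region is never counted, which is exactly why a bug there would go undetected.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B)
Image = List[List[Pixel]]             # rows of pixels; image[y][x]
Region = Tuple[int, int, int, int]    # (x, y, width, height) in pixels


def in_any_region(x: int, y: int, regions: List[Region]) -> bool:
    """True if pixel (x, y) falls inside any ignore region."""
    return any(rx <= x < rx + rw and ry <= y < ry + rh
               for rx, ry, rw, rh in regions)


def changed_pixels(baseline: Image, candidate: Image, ignore: List[Region]) -> int:
    """Count differing pixels, skipping every pixel inside an ignore region."""
    if len(baseline) != len(candidate) or any(
        len(b) != len(c) for b, c in zip(baseline, candidate)
    ):
        raise ValueError("images must have identical dimensions")
    count = 0
    for y, (row_b, row_c) in enumerate(zip(baseline, candidate)):
        for x, (pb, pc) in enumerate(zip(row_b, row_c)):
            if pb != pc and not in_any_region(x, y, ignore):
                count += 1
    return count
```

For example, ignoring a small rectangle where a timestamp is drawn keeps the clock from failing the test, while a change elsewhere on the page is still counted.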

By defining these standards early, teams can reduce unnecessary noise in visual testing and promote a more efficient review process.

3. Select the right tooling for visual testing

Selecting the right visual testing tool is critical for successfully integrating visual validation into the quality assurance process. The platform should support cross-browser and responsive testing and provide an intelligent way to distinguish meaningful visual changes from noise.

One of the major capabilities to look for is baseline management. With a strong baseline workflow, teams can version-control baselines and approve or reject changes. When multiple environments are involved, the tool should support a visual branching strategy as well as merging approved baselines across branches. This avoids redundant reviews and keeps visuals consistent as changes are promoted. Some critical flows may also require ignoring dynamic regions within a page, such as timestamps or other frequently changing content.
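The branching behaviour described above can be modelled in a few lines. The Python sketch below is a hypothetical in-memory store, not Sauce Visual's API or any vendor's: `BaselineStore`, `approve`, `lookup`, and `merge` are illustrative names. A feature branch inherits its parent's baselines until it approves its own, and merging promotes approved baselines to the target branch so they are not reviewed twice.

```python
from typing import Dict, Optional, Set, Tuple


class BaselineStore:
    """Hypothetical in-memory model of branch-aware baseline management.
    Real tools persist baselines server-side; this only models the logic."""

    def __init__(self, parents: Dict[str, str]):
        # Maps each branch to the branch it was created from, e.g. {"feature/x": "main"}.
        self.parents = parents
        # (branch, snapshot name) -> identifier of the approved baseline image
        self.baselines: Dict[Tuple[str, str], str] = {}

    def approve(self, branch: str, snapshot: str, image_id: str) -> None:
        """Record an approved baseline on a specific branch."""
        self.baselines[(branch, snapshot)] = image_id

    def lookup(self, branch: str, snapshot: str) -> Optional[str]:
        """Walk up the branch ancestry until an approved baseline is found."""
        current: Optional[str] = branch
        seen: Set[str] = set()
        while current is not None and current not in seen:
            seen.add(current)
            if (current, snapshot) in self.baselines:
                return self.baselines[(current, snapshot)]
            current = self.parents.get(current)
        return None

    def merge(self, source: str, target: str) -> None:
        """Promote every baseline approved on source into target."""
        for (branch, snapshot), image_id in list(self.baselines.items()):
            if branch == source:
                self.baselines[(target, snapshot)] = image_id
```

Falling back to the parent branch means a new feature branch starts with a usable baseline immediately, and only the screens it actually changes need fresh approval.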

By choosing a platform that fits the technical needs and process maturity of the QA organization, teams can confidently adopt visual testing and scale it efficiently across products.

4. Integrate visual tests gradually

Visual testing is much more effective when introduced in phases. Instead of attempting to cover the whole application upfront, teams should identify high-impact areas and expand coverage incrementally. Start with either component-level tests for reusable web components, using tools like Storybook, or with the 10-25 highest-traffic or highest-risk pages or screens (the "hot spots") of your application, where visual regressions are most likely to affect business outcomes.

By following this approach, teams can see quick wins, refine baselines, and fine-tune thresholds without being overwhelmed.
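One way to pick the initial hot spots is to score each page on both traffic and risk and take the top N. The Python sketch below assumes a hypothetical `Page` record with weekly visit counts (for example, from analytics) and a 1-5 risk rating the team assigns (for example, from change frequency or incident history). Multiplying the two is just one illustrative scoring choice; teams may weight them differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Page:
    name: str
    weekly_visits: int  # hypothetical traffic figure, e.g. from analytics
    risk: int           # hypothetical 1-5 rating assigned by the team


def hot_spots(pages: List[Page], top_n: int = 10) -> List[str]:
    """Rank pages by traffic x risk and return the names of the top_n."""
    ranked = sorted(pages, key=lambda p: p.weekly_visits * p.risk, reverse=True)
    return [p.name for p in ranked[:top_n]]
```

The resulting list is a starting point for discussion with product teams, not a final answer; brand-sensitive pages with modest traffic may still deserve a place.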

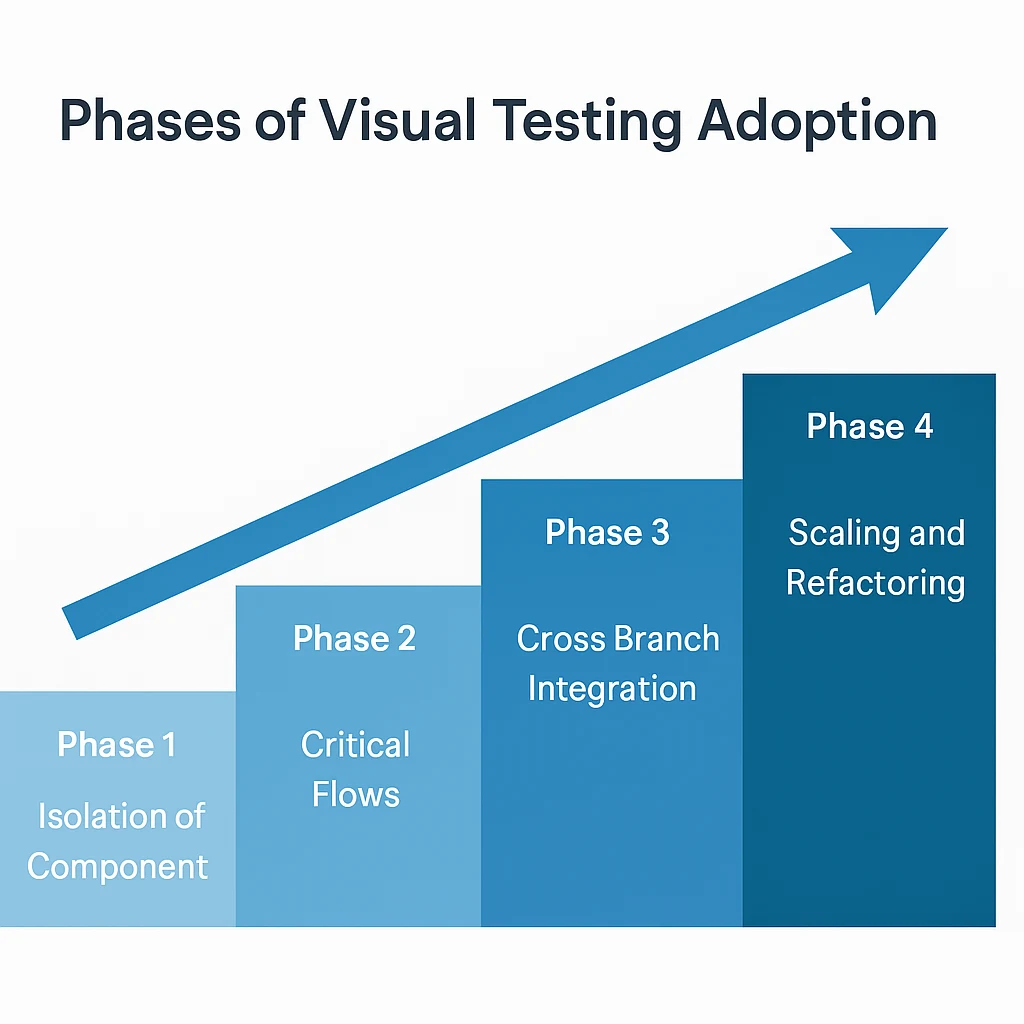

Gradual rollout strategy

Phase 1: Component isolation

Select 3-5 core web components (e.g., buttons and forms). Run visual tests on them in isolation and capture the initial visual baselines. Finally, define pass/fail thresholds.

Phase 2: Critical flows

Apply visual testing to the top 10-25 key screens or flows. Integrate tests into CI for feature branches. Track the drift and involve product teams in initial baseline reviews.

Phase 3: Cross-branch integration

Implement visual regression checks in the Dev, QA, and staging environments. Use branching strategies to merge and promote baselines across environments.

Phase 4: Scaling and refactoring

Expand this approach to additional pages and components. Fine-tune thresholds and ignore regions, and strengthen ownership. As developers and testers become more familiar with the visual testing workflow, scaling becomes seamless while quality stays consistent across environments.

Phase 5: Align visual testing with broader goals

To maximize the impact of visual testing, align it with the organization's broader quality assurance goals rather than treating it as a standalone practice. It should go hand in hand with functional testing, validating not just how features work but also how they look to users. Visual testing must also be aligned with design and product standards to maintain a consistent user interface. QA, development, and product teams should collaborate so that visual changes are reviewed and validated against a common understanding of quality. When organizations make visual testing part of their overall assurance strategy, they can deliver reliable, high-quality releases.

Building alignment across teams

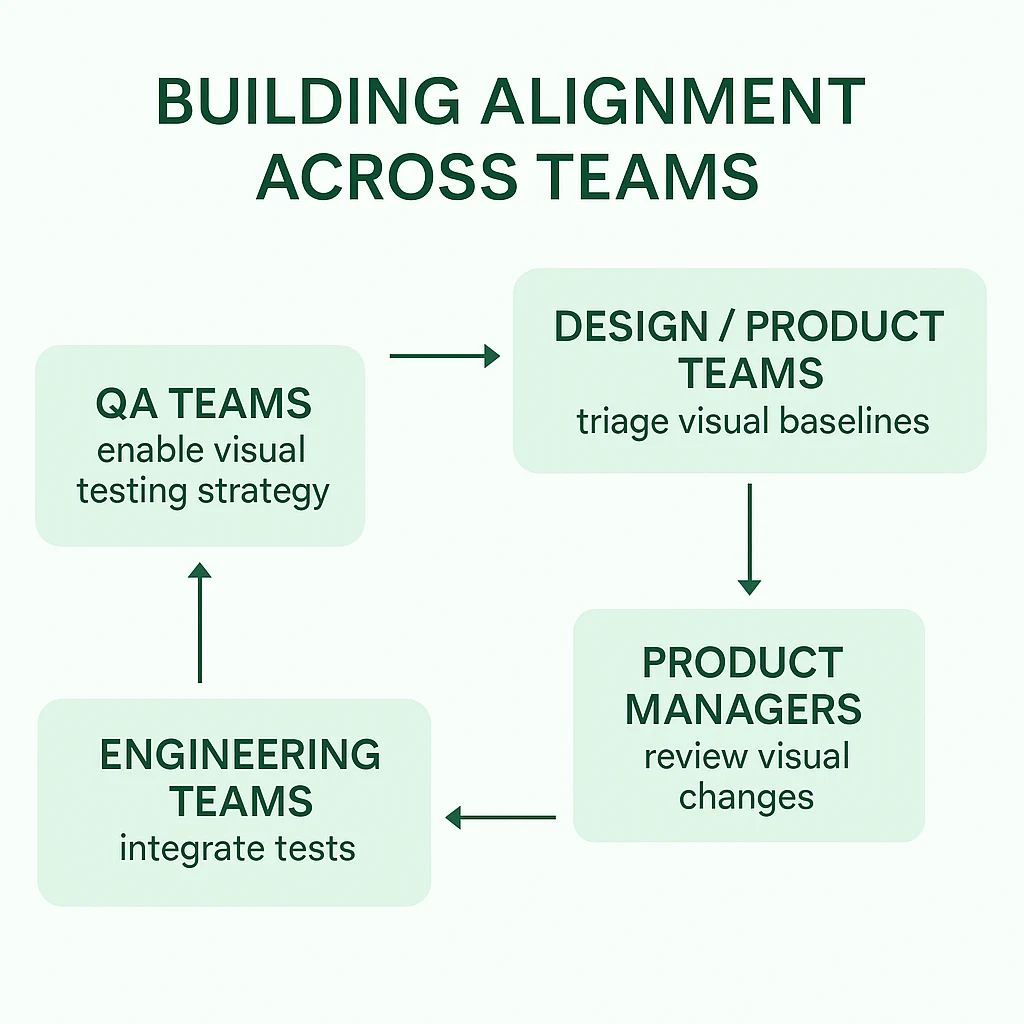

Cross-functional collaboration is one of the most overlooked aspects of visual testing. To succeed, development, quality assurance, design, and product teams must be aligned.

Who leads what?

QA teams should be the enablers and champions of the overall visual testing strategy, managing tooling and initiating test coverage.

Design and product teams should own the initial review of visual baselines, validating that the UI matches the expected designs.

Engineering teams should support integration into feature branches and ensure that visual tests are authored and maintained.

Product Managers can help review visual changes for any business-critical areas.

Below are the steps quality assurance leaders need to take to be successful in their visual testing adoption:

Training and communication

Teams need to understand visual testing and its benefits. It does not replace functional testing or design reviews; it is a tool for catching visual issues automatically. Share concrete examples of UI issues that visual testing can identify.

Baseline creation collaboration

Work with UX and product teams to establish what the correct UI should look like. When the application changes substantially, get the product team's approval of the updated baselines.

Define ownership and process

To keep visual testing streamlined, establish clear ownership and processes from the start. For example, product and quality assurance teams can review and approve baselines together.

After cross-browser visual tests run, any UI discrepancies, such as layout issues or font changes, should be captured in the screenshots generated automatically during test execution. Product or quality assurance teams must review these screenshots to confirm whether they show bugs.

When a change is confirmed as expected, the team must update the baseline with the new screenshot.

To make this process more streamlined:

QA reviews unexpected visual drift screenshots.

If a drift is identified as a bug, the QA team creates a ticket in the tracking system and assigns it to developers.

Escalate any unresolved discrepancies to the product team for further clarification.

By following this approach, teams maintain visual consistency and prevent delays or confusion during release cycles.
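The review flow above boils down to three outcomes per screenshot diff. The Python sketch below encodes that routing; `Verdict`, `VisualDiff`, and `route` are hypothetical names, and in practice the returned action would be carried out in your visual testing tool and ticketing system rather than returned as a string.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    EXPECTED = "expected"  # intentional design change confirmed by review
    BUG = "bug"            # unintended visual regression
    UNCLEAR = "unclear"    # QA cannot decide without product input


@dataclass
class VisualDiff:
    snapshot: str
    verdict: Verdict


def route(diff: VisualDiff) -> str:
    """Map a reviewed visual diff to the next action in the triage flow."""
    if diff.verdict is Verdict.EXPECTED:
        return f"update baseline for {diff.snapshot}"
    if diff.verdict is Verdict.BUG:
        return f"create ticket for {diff.snapshot} and assign to developers"
    return f"escalate {diff.snapshot} to product team"
```

Writing the rules down this explicitly, even informally, makes ownership unambiguous: every diff ends in exactly one of the three outcomes.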

Common challenges and how to overcome them

1. Flaky visual tests

A persistent problem in visual testing is flaky tests caused by minor pixel variations. These variations often stem from dynamic data and from rendering differences between test environments. Flaky tests produce misleading results, and team members lose trust in the test outcomes.

Flakiness can be minimized by defining pass/fail boundaries and using ignore regions for unstable elements. Adjusting thresholds to an acceptable level of visual difference, together with standardizing rendering across environments, keeps test results consistent.

2. Poor cross-team alignment

Visual testing becomes challenging when teams such as QA, development, and design fail to collaborate. When teams are not aligned on which user interface changes are acceptable, and ownership is unclear, reviews of visual regressions are delayed.

The success of visual testing depends on clearly defined roles: QA manages the process, design and product teams review the outcomes of visual testing, and development teams integrate the tests into the pipelines. Early communication and shared understanding are the foundation for success.

3. Inconsistent environments

When tests are executed on different screen resolutions, device types, or browsers, visual differences may arise. As a result, unnecessary test failures increase. Standardizing test environments by locking browser versions, resolutions, and font settings will help decrease environment-related noise and achieve stable test results.
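One way to enforce a standardized environment is to pin the rendering settings that baselines were captured in and check every run against them before comparing screenshots. The Python sketch below assumes a hypothetical `RenderEnv` record; the pinned values (Chrome 120.0, a 1366x768 viewport, scale factor 1.0, the "Inter" font) are placeholders, not recommendations.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class RenderEnv:
    browser: str
    browser_version: str
    viewport_width: int
    viewport_height: int
    device_scale_factor: float
    font_family: str


# Hypothetical pinned environment in which all baselines were captured.
PINNED = RenderEnv("chrome", "120.0", 1366, 768, 1.0, "Inter")


def env_mismatches(actual: RenderEnv, pinned: RenderEnv = PINNED) -> List[str]:
    """Return the names of settings that differ from the pinned environment."""
    return [f.name for f in fields(RenderEnv)
            if getattr(actual, f.name) != getattr(pinned, f.name)]
```

If the list is non-empty, the run can be flagged as an environment problem instead of being reported as a visual regression, which keeps environment noise out of the review queue.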

4. Baseline management complexity

As applications evolve, managing visual baselines across branches and environments becomes difficult. Without proper versioning, teams risk incorrect baseline updates and overlooked regressions.

Adopt a tool with robust baseline version control and approval workflows to help manage this complexity. A structured, disciplined process for deciding when and how to update baselines is essential for long-term success.

5. Adoption resistance

Visual testing is often perceived as overhead or an optional activity. Developers might see it as redundant, and leadership may be reluctant to invest in it. The key to overcoming this resistance is to demonstrate value early: show concrete examples of the visual bugs it catches, and integrate the results into existing workflows so that visual testing feels seamless and necessary.

Final thoughts

Visual testing is no longer an optional practice. It has evolved from a nice-to-have to a core strategy for delivering consistent, high-quality user experiences. As applications become more dynamic and user experience-driven, ensuring visual consistency becomes as critical as verifying the functionality.

Successful adoption depends less on tools than on a mindset shift across teams, and that shift is non-negotiable. When QA, development, product, and design teams collaborate with clear ownership, visual testing can become a seamless part of the entire delivery process.

Start small, and focus on high-impact workflows, reusable screens or components, and brand-sensitive areas. Standardize across branches and environments, and scale gradually. With the right processes in place, organizations can identify visual issues proactively, improve confidence in releases, and strengthen their overall brand.

Visual testing is not just about catching UI bugs. It is about delivering a reliable, high-quality experience that lives up to the brand promise.

Testing tools like Sauce Visual help QA teams find and fix visual errors and inconsistencies across web browsers, mobile web, and native mobile apps early in the development lifecycle. To learn more about Sauce Visual, take the product tour.